I have a production web server backed by a PostgreSQL database.

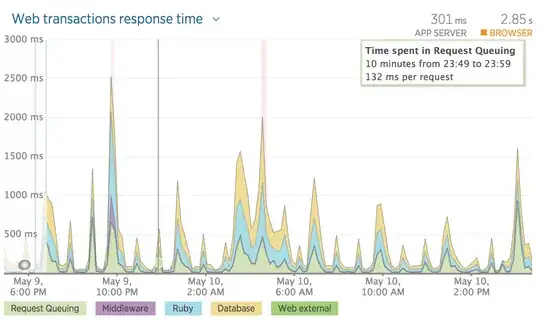

My server receives data from another server every hour on the hour. The other server wakes up and sends many requests to my server, each resulting in an insert or update in the PostgreSQL database.

In order to avoid overloading my server, the requests are queued and handled one at a time.
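For illustration, the serial handling described above can be sketched as a single worker thread draining a FIFO queue. This is a minimal sketch assuming an in-process Python queue; the real server may use a different queueing mechanism, and `handle_request`, `start_worker` and the `None` stop sentinel are hypothetical names, not part of the actual setup.

```python
import queue
import threading


def handle_request(payload, applied):
    # Hypothetical stand-in for the real handler: in production this would
    # run a single INSERT or UPDATE against PostgreSQL.
    applied.append(payload)


def start_worker(requests, applied):
    """Start one background thread that processes queued requests one at a time."""

    def run():
        while True:
            payload = requests.get()
            if payload is None:  # hypothetical sentinel: stop the worker
                requests.task_done()
                break
            try:
                handle_request(payload, applied)
            finally:
                requests.task_done()

    worker = threading.Thread(target=run, daemon=True)
    worker.start()
    return worker


if __name__ == "__main__":
    requests = queue.Queue()
    applied = []
    worker = start_worker(requests, applied)
    # Simulate the burst of requests that arrives on the hour.
    for i in range(5):
        requests.put({"id": i})
    requests.put(None)
    worker.join()
    print([p["id"] for p in applied])  # [0, 1, 2, 3, 4]
```

Because there is only one worker, at most one database write is in flight at any moment, and requests are applied in the order they arrived.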

So most of the time my server performs a modest number of reads from the database, and every hour on the hour it runs many inserts and updates, one after the other.

The problem I'm experiencing is that once the inserts/updates start, database performance becomes very poor for a couple of minutes. Then, even though the inserts/updates continue, performance recovers and stays at a good level until they are finished.

I asked around and got some good pointers that may explain why the web server itself slows down, but since the database's performance degrades as well, I suspect there is more to it.

What can explain this behaviour? How can I fix this?