When decimals are divided, the precision and the scale of the result value are calculated due to this formula:

max precision = (p1 - s1 + s2) + MAX(6, s1 + p2 + 1) -- up to 38

max scale = MAX(6, s1 + p2 + 1)

What's the formula when decimals are multiplied?

For example:

IF OBJECT_ID('tempdb..#DataSource') IS NOT NULL

BEGIN

DROP TABLE #DataSource;

END;

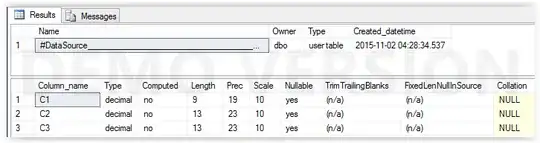

SELECT CAST(1 AS DECIMAL(9,0)) / CAST(2 AS DECIMAL(9,0)) AS C1

,CAST(1 AS DECIMAL(9,0)) / CAST(2 AS DECIMAL(9,0)) * 100 AS C2

,CAST(1 AS DECIMAL(9,0)) / CAST(2 AS DECIMAL(9,0)) * CAST(100 AS DECIMAL (3, 0)) AS C3

INTO #DataSource;

EXEC tempdb.dbo.sp_help '#DataSource';

The precision and the scale of column C1 are calculated based on the specified formula:

p1 = 9

p2 = 9

s1 = 0

s2 = 0

max precision = (9 - 0 + 0) + MAX(6, 0 + 9 + 1) --> 19

max scale = MAX(6, 0 + 9 + 1) --> 10

When this result is multiplied by 100 (the value 100 is converted to DECIMAL(3,0)), the result has precision is 23 and scale 10.

If the same formula is applied, the values are as follows:

p1 = 19

p2 = 3

s1 = 10

s2 = 0

max precision = (19 - 10 + 0) + MAX(6, 10 + 3 + 1) --> 9 + 14 = 23

max scale = MAX(6, 10 + 3 + 1) --> 14

So, the precision is correct, but the scale is bigger - 14 instead 10.

Could anyone tell what the formula is for calculating precision and scale when decimals are multiplied?