Imagine a stream of data that is "bursty", i.e. it could have 10,000 events arrive very quickly, followed by nothing for a minute.

I'd like your expert advice: how can I write the C# insert code for SQL Server so that SQL Server is guaranteed to cache everything in its own RAM immediately, without blocking my app for longer than it takes to feed the data into that RAM? Are there known patterns for setting up the SQL Server instance itself, or the individual tables I'm writing to, that achieve this?

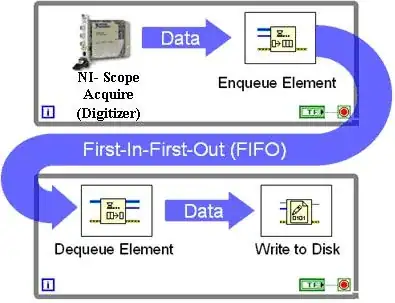

Of course, I could roll my own version by building a queue in RAM, but I don't want to reinvent the Paleolithic Stone Axe, so to speak.
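For reference, the hand-rolled queue I have in mind would look roughly like the sketch below. This is only a minimal illustration under assumptions: `BufferedWriter<T>` and its `flushBatch` delegate are hypothetical names, and the delegate is where a batched insert into SQL Server would go. Nothing here touches a real database.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical helper: buffers events in RAM and flushes them in batches
// on a background task, so the producer never waits on the database.
public sealed class BufferedWriter<T> : IAsyncDisposable
{
    private readonly Channel<T> _queue = Channel.CreateUnbounded<T>(
        new UnboundedChannelOptions { SingleReader = true });
    private readonly Func<IReadOnlyList<T>, Task> _flushBatch;
    private readonly int _maxBatchSize;
    private readonly Task _pump;

    // flushBatch is an assumed callback that performs the actual batched insert.
    // It must not keep a reference to the list, which is reused between batches.
    public BufferedWriter(Func<IReadOnlyList<T>, Task> flushBatch, int maxBatchSize = 1000)
    {
        _flushBatch = flushBatch;
        _maxBatchSize = maxBatchSize;
        _pump = Task.Run(PumpAsync);
    }

    // Returns immediately; the item only lives in process memory until flushed.
    public bool Enqueue(T item) => _queue.Writer.TryWrite(item);

    private async Task PumpAsync()
    {
        var batch = new List<T>(_maxBatchSize);
        // WaitToReadAsync returns false once the writer is completed and the queue is empty.
        while (await _queue.Reader.WaitToReadAsync())
        {
            while (batch.Count < _maxBatchSize && _queue.Reader.TryRead(out var item))
                batch.Add(item);

            if (batch.Count > 0)
            {
                await _flushBatch(batch);
                batch.Clear();
            }
        }
    }

    // Stops accepting new items and waits for everything queued so far to be flushed.
    public async ValueTask DisposeAsync()
    {
        _queue.Writer.Complete();
        await _pump;
    }
}
```

The obvious weakness is that anything still sitting in the queue is lost if my process dies before the flush, and an exception in `flushBatch` stops the pump, which is part of why I'd prefer SQL Server to take ownership of the data as early as possible.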