I have a table with 20M rows, and each row has 3 columns: time, id, and value. For each id and time, there is a value for the status. I want to know the lead and lag values of a certain time for a specific id.

I have used two methods to achieve this. One method is using join and another method is using the window functions lead/lag with clustered index on time and id.

I compared the performance of these two methods by execution time. The join method takes 16.3 seconds and the window function method takes 20 seconds, not including the time to create the index. This surprised me because the window function seems to be advanced while the join methods is brute force.

Here is the code for the two methods:

Create Index

create clustered index id_time

on tab1 (id,time)

Join method

select a1.id,a1.time

a1.value as value,

b1.value as value_lag,

c1.value as value_lead

into tab2

from tab1 a1

left join tab1 b1

on a1.id = b1.id

and a1.time-1= b1.time

left join tab1 c1

on a1.id = c1.id

and a1.time+1 = c1.time

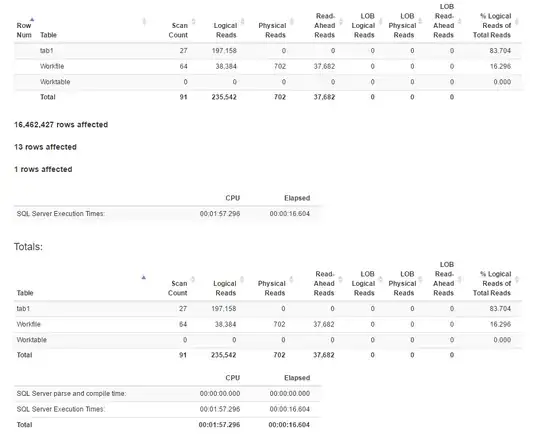

IO statistics generated using SET STATISTICS TIME, IO ON:

Here is the execution plan for the join method

Window Function method

select id, time, value,

lag(value,1) over(partition by id order by id,time) as value_lag,

lead(value,1) over(partition by id order by id,time) as value_lead

into tab2

from tab1

(Ordering only by time saves 0.5 seconds.)

Here is the execution plan for Window function method

IO statistics

[![Statistics for Window function method 4]](../../images/053117a4c729ad083684f73eac2d84da.webp)

I checked the data in sample_orig_month_1999 and it seems that the raw data is well ordered by id and time. Is this the reason of performance difference?

It seems that the join method has more logical reads than the window function method, while the execution time for the former is actually less. Is it because the former has a better parallelism?

I like the window function method because of the concise code, is there any way to speed it up for this specific problem?

I'm using SQL Server 2016 on Windows 10 64 bit.