Question:

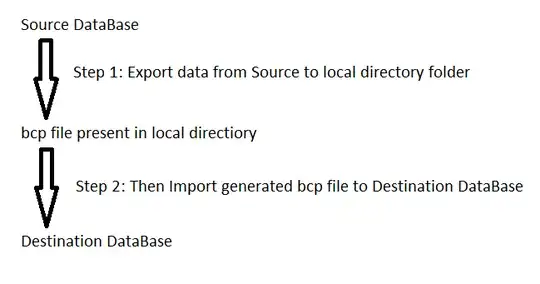

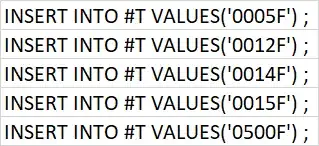

I have a script with around 45,000 `INSERT ... SELECT` statements. When I try to run it, I get an error message saying that I have run out of memory. How can I get this script to run?

Context:

- Added some new data fields to make an app play nice with another app the client uses.

- Got a spreadsheet from the client that maps existing data items to values for these new fields.

- Converted spreadsheet to insert statements.

- If I run only some of the statements, it works, but the entire script does not.

- No. There are no typos.
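Because running a subset of the statements works, one common workaround is to split the script into smaller batches. The minimal sketch below assumes SQL Server tooling (SSMS or `sqlcmd`), where `GO` is a client-side batch separator, and assumes the generated script has one statement per line. The function name `add_batch_separators` and the default batch size of 1000 are hypothetical choices, and whether batching alone avoids the memory error depends on the client.

```python
from typing import Iterable


def add_batch_separators(statements: Iterable[str], batch_size: int = 1000) -> str:
    """Join SQL statements into one script, adding a GO line after every
    `batch_size` statements so the client sends them as separate batches.

    Assumes SQL Server tooling, where GO is a batch separator understood by
    SSMS/sqlcmd rather than a T-SQL statement. Hypothetical helper.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    lines: list[str] = []
    count = 0
    for stmt in statements:
        stmt = stmt.strip()
        if not stmt:
            continue  # skip blank lines left over from the spreadsheet export
        lines.append(stmt)
        count += 1
        if count % batch_size == 0:
            lines.append("GO")  # end of a full batch
    if count % batch_size != 0:
        lines.append("GO")  # close the final, partial batch
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical statements standing in for the generated script.
    demo = [f"INSERT INTO Target (x) SELECT {i};" for i in range(5)]
    print(add_batch_separators(demo, batch_size=2), end="")
```

Running the resulting file from the command line with `sqlcmd -i`, instead of opening it in the SSMS editor, is another option often suggested for very large scripts.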

If there is a different way I should be loading this data, feel free to chastise me and let me know.
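One alternative to tens of thousands of individual statements is to bulk-load the spreadsheet into a staging table and then copy it into the real table with a single set-based `INSERT ... SELECT`. The sketch below uses Python's built-in `sqlite3` purely as a stand-in, since the question does not name the database; the table names (`Items`, `ItemExtra`, `MappingStaging`), the CSV columns `Code` and `NewField`, and the function `load_mapping` are all hypothetical. A real server would likely offer its own bulk-load tooling (for example `BULK INSERT` or `bcp`, if this is SQL Server).

```python
import csv
import io
import sqlite3

# Hypothetical schema: Items already exists, ItemExtra holds the new field,
# and MappingStaging receives the spreadsheet rows. Names are illustrative.
SCHEMA = """
CREATE TABLE Items (Id INTEGER PRIMARY KEY, Code TEXT UNIQUE NOT NULL);
CREATE TABLE ItemExtra (ItemId INTEGER NOT NULL REFERENCES Items(Id), NewField TEXT);
CREATE TABLE MappingStaging (Code TEXT NOT NULL, NewField TEXT);
"""


def load_mapping(conn: sqlite3.Connection, csv_text: str) -> None:
    """Load the exported spreadsheet (CSV with Code,NewField columns) into a
    staging table, then copy it into ItemExtra with one INSERT ... SELECT."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [(r["Code"], r["NewField"]) for r in reader]
    with conn:  # one transaction: commits on success, rolls back on error
        # Parameterized bulk insert of the raw mapping rows.
        conn.executemany(
            "INSERT INTO MappingStaging (Code, NewField) VALUES (?, ?)", rows
        )
        # One set-based statement; codes with no matching item are skipped.
        conn.execute(
            """
            INSERT INTO ItemExtra (ItemId, NewField)
            SELECT i.Id, m.NewField
            FROM MappingStaging AS m
            JOIN Items AS i ON i.Code = m.Code
            """
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO Items (Code) VALUES (?)", [("A",), ("B",), ("C",)])
    load_mapping(conn, "Code,NewField\nA,red\nC,blue\nZ,green\n")
    print(conn.execute("SELECT ItemId, NewField FROM ItemExtra ORDER BY ItemId").fetchall())
    # [(1, 'red'), (3, 'blue')]
```

The join also surfaces mapping rows whose codes do not match any existing item, which is easy to check in the staging table before the final insert.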