I am a SQL Developer (not DBA or Architect) for a small (~50 employees) SaaS company. I am tasked with figuring out how to:

- Offload operational reporting from our 100+ OLTP databases

- Allow those reports to run against data from multiple client databases

- Position our company to provide more analytics-based solutions in the future

I have read a number of articles on various technologies like transactional replication (specifically the many-to-one/central subscriber model), SQL service broker, log shipping, Change Tracking (CT), and Change Data Capture (CDC, my understanding is this is enterprise-only), and I am not sure what path is best to pursue.

I am hoping some of you with integration expertise may have encountered a setup similar to ours and be able to point me down a successful path or direct me to some resources that would be helpful.

Due to cost constraints, our solution must work within SQL Server Standard Edition. Also, the solution must be reasonable to support/maintain within our small organization.

Basic configuration:

We currently have 100+ individual client databases, most deployed on SQL servers at our data center, but some deployed on client servers within their data center that we can remote into. These are all SQL Server 2008 R2 databases, but we are planning to upgrade to SQL 2016 soon.

We use database projects and dacpacs to ensure the schema is the same across all client databases that would be integrated. However, since we do not force all clients to upgrade to new versions at the same time, some schema differences are possible between upgrades. The solution must be flexible enough not to break if client A is on software version 1.0 and client B is on version 1.1.

Operational reports are currently run directly from each client's OLTP database. We are concerned about the impact this will have on the application's performance if we do not offload it.

High-Level Requirements:

Our clients are hospital sterile processing departments (SPD’s) who want up-to-the-moment reports on what they’ve processed so far, where inventory is, etc. SPD's process inventory around the clock, including weekends and holidays. Since one of the main purposes of this effort is to better support operational reporting, we would like the data to be as close to real-time as possible to continue meeting clients’ needs.

Currently we have some SPD’s in separate databases that are actually part of the same hospital system. These clients want the ability to report against all the SPD’s in their system.

Strategically speaking, we would like the ability to easily aggregate data across all our clients to support our internal analytics initiatives. Our expectation is that we would be able to use the collected operational data as a source for data marts/warehouse.

Thoughts so far:

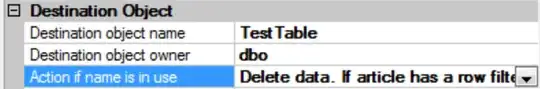

Transactional replication seems like it would provide the most "real-time" solution. I found this response to be especially helpful, but I am concerned that with the potential for schema differences it will not work for us: SQL Server Many-to-One replication

Log shipping doesn't sound ideal given that the log cannot restore while queries are active. I either have to kick everyone out so the log can restore or the data will become stale. I am unclear as to whether this method could be used to centralize data from multiple databases, since each shipped log would only be for the individual database it came from.

Using SQL service broker, latency may be unpredictable if a queue were unable to keep up with the number of messages to process.

CT only identifies a version for each table row. Latency would be dependent on how quickly we could process something like an SSIS package against each database to retrieve the data and insert it in a central repository.

Do we need to consider replicating each database individually and then perhaps use some sort of data virtualization technique to combine data from the various replicated sources?

Any advice or direction you are willing to provide would be greatly appreciated.