<TL;DR>

Definitive answer based on criteria outlined below. The discussion below refers to minor differences in the criteria for the selection of records (don't include NULLs) and their ordering - I finally used the OP's ordering and not the one discussed in the analysis below this section.

SELECT

name, MAX(rn2) AS cnt, fv_sd, lv_sd

FROM

(

SELECT

*,

FIRST_VALUE(p_date) OVER (PARTITION BY sd ORDER BY sd) AS fv_sd,

LAST_VALUE(p_date) OVER (PARTITION BY sd ORDER BY sd) AS lv_sd,

ROW_NUMBER() OVER (PARTITION BY sd ORDER BY rn) AS rn2

FROM

(

SELECT

rn, name, lgn, c_diff,

SUM(c_diff) OVER (ORDER BY rn, name) AS sd,

p_date

FROM

(

SELECT

rn,

name, LAG(name) OVER (ORDER BY rn) AS lgn,

CASE

WHEN name = LAG(name) OVER (ORDER BY rn)

THEN 0

ELSE 1

END AS c_diff,

p_date

FROM test

-- ORDER BY rn

) AS t_01

-- ORDER BY rn

) AS t_02

-- ORDER BY rn

) AS t_03

GROUP BY name, fv_sd, lv_sd

HAVING MAX(rn2) > 1

ORDER BY cnt DESC, fv_sd, lv_sd, name;

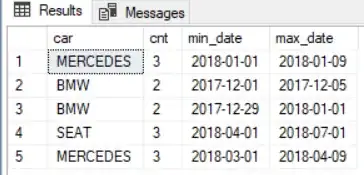

Result (on PostgreSQL and SQL Server):

name cnt fv_sd lv_sd

MERCEDES 3 2018-01-01 2018-01-09

MERCEDES 3 2018-03-01 2018-04-09

SEAT 3 2018-04-01 2018-07-01

BMW 2 2017-12-01 2017-12-05

BMW 2 2017-12-29 2018-01-01

</TL;DR>

I looked at this and came up with the following solution, which unlike all the others doesn't make use of CTEs. All of the code below can be found on the fiddle here.

My answer is as follows - I'm going to go through my logic, partly to explain it to you, and partly to explain it to myself! :-)

Zeroth Step:

Right off the bat, we have to decide what we are doing with NULLs in the name field - I've decided to eliminate them - we don't know what they are, and absent any information from the OP, we can only speculate. I therefore will not INSERT records with NULL names.

I'm using the OP's original INSERTion order - again, absent any other information, we can't know any better!

First step:

I created the table as follows:

CREATE TABLE test

(

name VARCHAR (8),

p_date DATE

);

using the OP's original data - minus the `NULL's:

INSERT INTO test (name, p_date)

VALUES

('MERCEDES', '2018-01-01'),

('SEAT', '2018-02-01'),

('MERCEDES', '2018-04-01'),

('BMW', '2018-01-01'),

...

... snipped for brevity

...

Second step:

The details from here on differ slightly from the ones outlined in the <TL;DR> definitive section above - the general points about the workings of the SQL still apply - it's just the actual results which differ from above.

Establish an order for the records - this has been pointed out by other posters. I have gone down a different route for numbering - I've used the tuple (date, name) as the ordering criterion - this gives me slightly different results (naturally), but you can adjust this as you will!

SELECT * FROM test ORDER BY p_date, name;

Result:

name p_date

BMW 2017-01-01

AUDI 2017-12-01

BMW 2017-12-01

SEAT 2017-12-01

BMW 2017-12-05 -- <--- Note the sequence of 5 BMWs.

BMW 2017-12-29

BMW 2018-01-01

BMW 2018-01-01

BMW 2018-01-01

MERCEDES 2018-01-01

...

... snipped for brevity

...

Third step:

SELECT

ROW_NUMBER() OVER (ORDER BY p_date, name) AS rn,

name, LAG(name) OVER (ORDER BY p_date, name),

CASE

WHEN name = LAG(name) OVER (ORDER BY p_date, name) THEN 0

ELSE 1

END AS c_diff,

p_date

FROM test

ORDER BY p_date;

Result:

rn name lag c_diff p_date

1 BMW NULL 1 2017-01-01

2 AUDI BMW 1 2017-12-01

3 BMW AUDI 1 2017-12-01

4 SEAT BMW 1 2017-12-01

5 BMW SEAT 1 2017-12-05

6 BMW BMW 0 2017-12-29

7 BMW BMW 0 2018-01-01

What this does is establish the sequence of records by means of the ROW_NUMBER() function (see the PostgreSQL tutorial here & a more comprehensive list here). Window functions are extremely powerful and well worth putting in the effort to get to know!

So, now we have a rn column associated with our data but there's more to window functions - the LAG() function (see also the related LEAD()) which in conjunction with the CASE expression allows us to start to discriminate between groups of cars. We can see that for every change of car make, there is a 1 and for every time the next car is of the same make as the procediing one, there is a 0.

Step 4:

We use the result of step 3 as a subquery as follows:

SELECT

rn, name, lgn, c_diff,

SUM(c_diff) OVER (ORDER BY rn, name) AS sd,

p_date

FROM

(

SELECT

ROW_NUMBER() OVER (ORDER BY p_date, name) AS rn,

...

... subquery snipped for brevity

...

Result:

rn name lgn c_diff sd p_date

1 BMW 1 1 2017-01-01

2 AUDI BMW 1 2 2017-12-01

3 BMW AUDI 1 3 2017-12-01

4 SEAT BMW 1 4 2017-12-01

5 BMW SEAT 1 5 2017-12-05 -- <-- note 5

6 BMW BMW 0 5 2017-12-29 -- "

7 BMW BMW 0 5 2018-01-01 -- "

...

... results snipped for brevity

...

So, we can see that by taking the cumulative sum, we obtain a way of grouping the cars in the way that we want. We have 5 as the cumulative sum (not related to the fact that there are 5 BMWs - for the 3 Mercs, the cumulative sum is 8).

5th step:

We now establish the first and last dates of sale for the groups of vehicles using the FIRST_VALUE() and LAST_VALUE() window functions (see, they are really important!):

SELECT

*, -- * is NOT best practice! SQL can be cleaned up here - left for demonstration

FIRST_VALUE(p_date) OVER (PARTITION BY sd) AS fv_sd,

LAST_VALUE(p_date) OVER (PARTITION BY sd) AS lv_sd,

ROW_NUMBER() OVER (PARTITION BY sd ORDER BY rn) AS rn2

FROM

(

SELECT

rn, name, lgn, c_diff,

...

... subquery snipped for brevity

...

Results (only shown for the group of 5 BMWs):

rn name lgn c_diff sd p_date fv_sd lv_sd rn2

5 BMW SEAT 1 5 2017-12-05 2017-12-05 2018-01-01 1

6 BMW BMW 0 5 2017-12-29 2017-12-05 2018-01-01 2

7 BMW BMW 0 5 2018-01-01 2017-12-05 2018-01-01 3

8 BMW BMW 0 5 2018-01-01 2017-12-05 2018-01-01 4

9 BMW BMW 0 5 2018-01-01 2017-12-05 2018-01-01 5

So, we can see that we now have the first date of a purchase for a group and the last date of purchase in a group.

Step 6 (final):

We use the result of Step 5 (again, as a subquery) to get the final result:

SELECT

name, MAX(rn2) AS mrn2, fv_sd, lv_sd

FROM

(

SELECT

name, rn, p_date,

FIRST_VALUE(p_date) OVER (PARTITION BY sd) AS fv_sd,

...

... subquery snipped for brevity

...

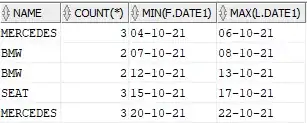

Result (final & full):

name mrn2 fv_sd lv_sd

BMW 5 2017-12-05 2018-01-01

MERCEDES 2 2018-01-01 2018-01-01

MERCEDES 3 2018-01-05 2018-01-09

SEAT 2 2018-05-01 2018-07-01

It's not the same as for the others but, as mentioned, I used different ordering criteria for establishing the value of ROW_NUMBER().

Analysis of performance (EXPLAIN (ANALYZE, BUFFERS, VERBOSE) for all:

- the tests were all done using a table sorted on

date and name in that order so that the playing field would be level!

My solution:

Planning Time: 0.154 ms

Execution Time: 0.257 ms -- typical ~ 0.260

54 rows

@Ronie's solution:

Planning Time: 0.134 ms

Execution Time: 0.269 ms -- typical ~ 0.260

40 rows

@Maheshwaran's solution:

Planning Time: 0.297 ms

Execution Time: 0.379 ms -- typical ~ .390

78 rows

@BłażejCiesielski's solution:

Planning Time: 0.301 ms

Execution Time: 0.388 ms -- typical ~ .400

78 rows

I was suprised to see that my solution was as performant as @Ronie's - puzzled, because my EXPLAIN has more steps...

Obviously a performance analysis based on 23 records on a server over which you have no control is not a great basis for a serious analysis - I would urge anyone undertaking this to do it on their own h/ware with their own normal load.

Plus, the performance analysis isn't SQL Server. The SQL Server solution is here - I could set up profiling, but it's been a long day! :-)

it took me a long time to "port" my PostgreSQL solution to SQL Server, but I learnt a lot doing it - about ORDERing and NULLs!

+1 for an interesting question - I learnt a lot! And +1 to @Ronie for a very clever solution!