As Brent has quoted there, one of the key differences is scale.

If your calculation is embarrassingly parallel, i.e. each row can be computed independently of the others, then using sp_execute_external_script with @parallel = 1 will allow you to run your R calculations in parallel easily. Note that it is still possible to bite off more than you can chew and run out of memory, in which case you can add @params = N'@r_rowsPerRead INT', @r_rowsPerRead = N, where N is the maximum number of rows you want to read in one go.
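As a rough sketch of how those two settings fit together, something like the following should work. The table dbo.SourceRows, its columns id and value, the doubling calculation and the batch size of 500000 are all made up for illustration, so substitute your own query, R script and row count.

```sql
-- Minimal sketch, not a definitive implementation.
-- Assumptions: dbo.SourceRows (id INT, value FLOAT) is a hypothetical table,
-- and the R script is a placeholder for a genuinely row-independent calculation.
EXEC sp_execute_external_script
    @language = N'R',
    @script = N'
        # Each row is processed independently, so the work can be split freely.
        OutputDataSet <- data.frame(id = InputDataSet$id,
                                    result = InputDataSet$value * 2)
    ',
    @input_data_1 = N'SELECT id, value FROM dbo.SourceRows',
    @parallel = 1,                        -- allow the script to run in parallel
    @params = N'@r_rowsPerRead INT',      -- declare the streaming parameter
    @r_rowsPerRead = 500000               -- illustrative cap on rows read per batch
WITH RESULT SETS ((id INT, result FLOAT));
```

With @r_rowsPerRead set, the script runs once per batch of rows rather than once over the whole input, which is why this only suits calculations that do not need to see all rows at once.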

Using the above I have been able to run an R calculation against 2 billion rows of data in less than 10 minutes (obviously your mileage may vary).

If you need more control over the batching, such as ensuring that appropriate rows are grouped together, then I think you can achieve this by calling the RevoScaleR functions directly from within your R script, but I have not toyed with this.

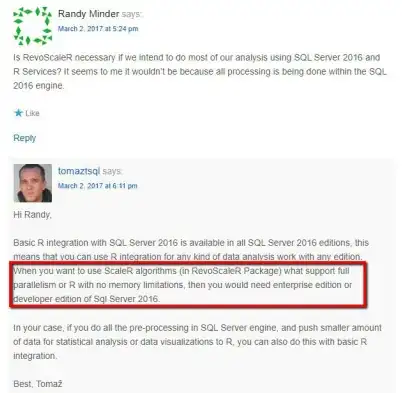

If you absolutely have to use Standard Edition, then you can get creative with batching up the data yourself, but when I tried this I found that the code got quite complex and was also not as quick as taking advantage of Enterprise Edition streaming.

More info here: https://learn.microsoft.com/en-us/sql/relational-databases/system-stored-procedures/sp-execute-external-script-transact-sql?view=sql-server-2017

Note that SSDT does not support GRANT EXECUTE ANY EXTERNAL SCRIPT, so if you are using SSDT for deployments then things become painful. I've raised this as an issue on UserVoice: https://feedback.azure.com/forums/908035-sql-server/suggestions/32896864-grant-execute-any-external-script-not-recognised-b