I upgraded a Postgres DB 9.3.2-->10.5 using pg_upgrade (in place). I did everything according to the documentation and the instructions given by pg_upgrade. Everything went fine but then I realized that the indexes were not being used in one of the tables (maybe others are affected too).

So I started an ANALYZE on that table yesterday which is still running (for over 22h)...!

The question: Is it normal for ANALYZE to have such a long execution time?

The table contains about 30M records. The structure is:

CREATE TABLE public.chs_contact_history_events (

event_id bigint NOT NULL

DEFAULT nextval('chs_contact_history_events_event_id_seq'::regclass),

chs_id integer NOT NULL,

event_timestamp bigint NOT NULL,

party_id integer NOT NULL,

event integer NOT NULL,

cause integer NOT NULL,

text text COLLATE pg_catalog."default",

timestamp_offset integer,

CONSTRAINT pk_contact_history_events PRIMARY KEY (event_id)

);

ALTER TABLE public.chs_contact_history_events OWNER to c_chs;

CREATE INDEX ix_chs_contact_history_events_chsid

ON public.chs_contact_history_events USING hash (chs_id)

TABLESPACE pg_default;

CREATE INDEX ix_chs_contact_history_events_id

ON public.chs_contact_history_events USING btree (event_id)

TABLESPACE pg_default;

CREATE INDEX ix_history_events_partyid

ON public.chs_contact_history_events USING hash (party_id)

TABLESPACE pg_default;

UPDATE:

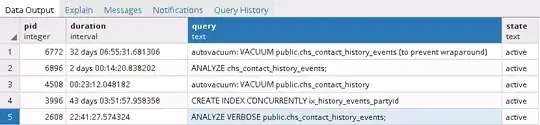

I ran the query below in order to get the currently running processes and got a more than interesting results:

SELECT pid, now() - pg_stat_activity.query_start AS duration, query, state

FROM pg_stat_activity

WHERE (now() - pg_stat_activity.query_start) > interval '5 minutes'

AND state = 'active';

It seems that the maintenance tasks and the concurrent recreation of the index table are frozen!

So the next question: is it safe to cancel those processes? And what to do next? IMO stopping them all and restarting index creation will be necessary but I'm unsure.

ANNEX 1

Possibly related errors corrected in v9:

9.3.7 and 9.4.2 Fix possible failure during hash index bucket split, if other processes are modifying the index concurrently

9.3.18 and 9.4.13 and 9.5.8 and 9.6.4 Fix low-probability corruption of shared predicate-lock hash table in Windows builds

9.5.4 Fix building of large (bigger than shared_buffers) hash indexes The code path used for large indexes contained a bug causing incorrect hash values to be inserted into the index, so that subsequent index searches always failed, except for tuples inserted into the index after the initial build.

Possibly related errors corrected in v10:

10.2 Fix failure to mark a hash index's metapage dirty after adding a new overflow page, potentially leading to index corruption

Prevent out-of-memory failures due to excessive growth of simple hash tables

And last but not least that makes me concerns (since an upgrade seems to be not realistic on the productive environment):

10.6 Avoid overrun of a hash index's metapage when BLCKSZ is smaller than default

Fix missed page checksum updates in hash indexes

ANNEX 2

Upgrade instruction in v10:

Hash indexes must be rebuilt after pg_upgrade-ing from any previous major PostgreSQL version

Major hash index improvements necessitated this requirement. pg_upgrade will create a script to assist with this.

Note that I ran that script of course at the time of upgrade.