I have a simple setup:

- 2 Mongos

- 3 Shards, deployed as a Replica Set

- 3 Config Servers, deployed as a Replica Set

Each replica set consists of 1 Primary, 1 Secondary and 1 Arbiter. (Except the Config Servers, they don't contain an arbiter).

Beside authentication and --enableMajorityReadConcern false they are using MongoDB Defaults. I am using as well the latest MongoDB 4.2.1.

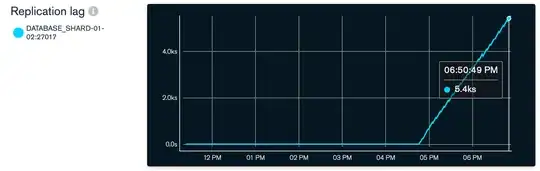

When not enabling sharding everything works smoothly. As soon as I enable sharding on a large collection with around 22.000.000 documents that have an avg. size of 400 Bytes the cluster comes to a halt with CPU growing to 100% and the primary shard mongod instances becoming unresponsive after some time. On the primary shard it seems like it can't keep up with the replication of the deletion, and the replication lag just grows linear without ever decreasing:

I can solve this issue temporarily by removing the secondary from the replica set and therefore basicaly disabling replication.

But now I get a second problem:

90% of my chunk migrations fail with error 'aborted'

balancer:

Currently enabled: yes

Currently running: yes

Collections with active migrations:

database.accounts.events started at Fri Dec 13 2019 07:30:09 GMT+0000 (UTC)

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

53 : Success

213 : Failed with error 'aborted', from shard1rs to shard2rs

293 : Failed with error 'aborted', from shard1rs to shard3rs

If I check the logs on the primary shard I see this:

2019-12-12T23:15:38.566+0000 I SHARDING [conn31] about to log metadata event into changelog: { _id: "52298fb18f9a:27017-2019-12-12T23:15:38.566+0000-5df2ca1a1c63e734d172a588", server: "52298fb18f9a:27017", shard: "shard1rs", clientAddr: "10.0.1.51:49388", time: new Date(1576192538566), what: "moveChunk.from", ns: "database.accounts.events", details: { min: { subscriberId: -6537969095477673617 }, max: { subscriberId: -6462537856126426178 }, step 1 of 6: 0, step 2 of 6: 41, step 3 of 6: 288, step 4 of 6: 80046, step 5 of 6: 381, to: "shard2rs", from: "shard1rs", note: "aborted" } }

On the secondary shard I get this:

2ca251c63e734d172a59c", $clusterTime: { clusterTime: Timestamp(1576192645, 12), signature: { hash: BinData(0, D068DBD91F1BF143500228C2519B6B920D4A356B), keyId: 6768021890377187357 } }, $configServerState: { opTime: { ts: Timestamp(1576192637, 1), t: 12 } }, $db: "admin" } numYields:0 reslen:727 locks:{} protocol:op_msg 417ms

2019-12-12T23:17:26.283+0000 I SHARDING [migrateThread] about to log metadata event into changelog: { _id: "529b18972649:27017-2019-12-12T23:17:26.283+0000-5df2ca8651ba8c152979cf93", server: "529b18972649:27017", shard: "shard2rs", clientAddr: "", time: new Date(1576192646283), what: "moveChunk.to", ns: "database.accounts.events", details: { min: { subscriberId: -6537969095477673617 }, max: { subscriberId: -6462537856126426178 }, step 1 of 6: 27, step 2 of 6: 19895, step 3 of 6: 76051, step 4 of 6: 14, note: "aborted", errmsg: "aborted" } }

2019-12-12T23:17:26.331+0000 I SHARDING [migrateThread] migrate failed: IllegalOperation: _transferMods failed in STEADY STATE: :: caused by :: No active migrations were found for collection database.accounts.events

2019-12-12T23:17:26.331+0000 I SHARDING [migrateThread] Abandoning in-migration of database

.accounts.events range [{ subscriberId: -6537969095477673617 }, { subscriberId: -6462537856126426178 }); scheduling deletion of any documents already copied

I couldn't find anything for the error No active migrations were found for collection. But I almost every single migration (90%) ends up with this error.

Here are the server sharding statistics for each shard. What I find particular interesting is the huge number of countStaleConfigErrors and the big discrepancy on Shard 1 between countDocsClonedOnDonor which is 29.346.060 while countDocsDeletedOnDonor is only 1.892.432. So the data has been many times copied over but only 6% of it was actually successfully acknowledged by the receiving shards.

Shard 1:

"shardingStatistics" : {

"countStaleConfigErrors" : NumberLong(7),

"countDonorMoveChunkStarted" : NumberLong(384),

"totalDonorChunkCloneTimeMillis" : NumberLong(33403337),

"totalCriticalSectionCommitTimeMillis" : NumberLong(2218),

"totalCriticalSectionTimeMillis" : NumberLong(118467),

"countDocsClonedOnRecipient" : NumberLong(0),

"countDocsClonedOnDonor" : NumberLong(29346060),

"countRecipientMoveChunkStarted" : NumberLong(0),

"countDocsDeletedOnDonor" : NumberLong(1892432),

"countDonorMoveChunkLockTimeout" : NumberLong(353),

"catalogCache" : {

"numDatabaseEntries" : NumberLong(2),

"numCollectionEntries" : NumberLong(2),

"countStaleConfigErrors" : NumberLong(1),

"totalRefreshWaitTimeMicros" : NumberLong(23860275),

"numActiveIncrementalRefreshes" : NumberLong(0),

"countIncrementalRefreshesStarted" : NumberLong(541),

"numActiveFullRefreshes" : NumberLong(0),

"countFullRefreshesStarted" : NumberLong(2),

"countFailedRefreshes" : NumberLong(0)

}

}

Shard 2:

"shardingStatistics" : {

"countStaleConfigErrors" : NumberLong(17),

"countDonorMoveChunkStarted" : NumberLong(0),

"totalDonorChunkCloneTimeMillis" : NumberLong(0),

"totalCriticalSectionCommitTimeMillis" : NumberLong(0),

"totalCriticalSectionTimeMillis" : NumberLong(0),

"countDocsClonedOnRecipient" : NumberLong(17694423),

"countDocsClonedOnDonor" : NumberLong(0),

"countRecipientMoveChunkStarted" : NumberLong(231),

"countDocsDeletedOnDonor" : NumberLong(16392678),

"countDonorMoveChunkLockTimeout" : NumberLong(0),

"catalogCache" : {

"numDatabaseEntries" : NumberLong(2),

"numCollectionEntries" : NumberLong(2),

"countStaleConfigErrors" : NumberLong(1),

"totalRefreshWaitTimeMicros" : NumberLong(10966428),

"numActiveIncrementalRefreshes" : NumberLong(0),

"countIncrementalRefreshesStarted" : NumberLong(372),

"numActiveFullRefreshes" : NumberLong(0),

"countFullRefreshesStarted" : NumberLong(2),

"countFailedRefreshes" : NumberLong(0)

}

}

Shard 3:

"shardingStatistics" : {

"countStaleConfigErrors" : NumberLong(17),

"countDonorMoveChunkStarted" : NumberLong(0),

"totalDonorChunkCloneTimeMillis" : NumberLong(0),

"totalCriticalSectionCommitTimeMillis" : NumberLong(0),

"totalCriticalSectionTimeMillis" : NumberLong(0),

"countDocsClonedOnRecipient" : NumberLong(11880556),

"countDocsClonedOnDonor" : NumberLong(0),

"countRecipientMoveChunkStarted" : NumberLong(156),

"countDocsDeletedOnDonor" : NumberLong(10589408),

"countDonorMoveChunkLockTimeout" : NumberLong(0),

"catalogCache" : {

"numDatabaseEntries" : NumberLong(2),

"numCollectionEntries" : NumberLong(2),

"countStaleConfigErrors" : NumberLong(1),

"totalRefreshWaitTimeMicros" : NumberLong(9441699),

"numActiveIncrementalRefreshes" : NumberLong(0),

"countIncrementalRefreshesStarted" : NumberLong(298),

"numActiveFullRefreshes" : NumberLong(0),

"countFullRefreshesStarted" : NumberLong(2),

"countFailedRefreshes" : NumberLong(0)

}

}