I have a heap with over a million inserts daily. This table is a "staging table" where messages are received and sent to differens queues that are processed by the application.

A stored proc named dbo.leg_msgs does an UPDATE (show plan https://www.brentozar.com/pastetheplan/?id=ryw9SDGoD) on this table, using an covered index with columns starting from most selective to least selective.

CREATE TABLE [dbo].[leg](

[guid_operacao] [uniqueidentifier] NOT NULL,

[dt_hr_entrada] [datetime] NOT NULL,

[dt_hr_envio] [datetime] NULL,

[id_fila] [int] NULL,

[str_protocolo] [text] NOT NULL,

[flg_enviar] [char](1) NOT NULL,

[nr_ctrl_if] [char](20) NULL,

[id_legado] [int] NULL,

[tp_mensagem] [int] NOT NULL,

[flag_sentido] [char](1) NULL,

[flg_proc_util] [char](1) NOT NULL,

[guid_protocolo] [uniqueidentifier] NOT NULL,

[str_protocolo_enviada] [text] NULL,

[fl_montou_protocolo] [char](1) NULL,

[dt_hr_lock] [datetime] NOT NULL,

[guid_lock] [uniqueidentifier] NOT NULL,

[dt_hr_ok_leg] [datetime] NULL,

[flg_ret_legado] [bit] NOT NULL,

[flg_enviada] [bit] NOT NULL,

[dt_hr_legado] [datetime] NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

GO

Stored proc:

CREATE PROCEDURE [dbo].[leg_msgs] (

@pTipoMensagem INT = NULL,

@pIntegracaoViaSQL CHAR(1) = 'N',

@pIdFila INT = NULL

)

AS

BEGIN

SET NOCOUNT ON

DECLARE @guid_operacao char(36),

@guid_protocolo char(36),

@dt_hr_entrada datetime,

@dt_ini datetime,

@dt_fim datetime,

@ds_strong_id varchar(max),

@str_protocolo varchar(max),

@cd_msg varchar(10),

@nm_fila varchar(100),

@id_tag_numctrl varchar(20),

@id_status_matera varchar(5),

@evento_id varchar(10),

@dt_hr_entrada_str varchar(50),

@nm_tag_sit_lanc varchar(200),

@nm_proc_montagem_protocolo varchar(100),

@strSQL nvarchar(1000),

@dt_movto varchar(10),

@tempo_espera numeric(20, 10),

@dt_hr_lock datetime,

@guid_lock char(36),

@montou_prot char(1),

@id_fila_rep int,

@qtd_regs int,

@bol_delete bit

-- Valores para @tempo_espera

-- 0.00069445 (00:02:00)

-- 0.00104167 (00:01:30)

-- 0.00069445 (00:01:00)

-- 0.00034724 (00:00:30)

SET @tempo_espera = 0.00069445

SET @dt_movto = CONVERT(varchar(10), getdate(), 112)

SET @dt_hr_lock = getdate()

SET @guid_lock = newid()

SET @dt_ini = CAST(@dt_movto + ' 00:00' AS datetime)

SET @dt_fim = CAST(@dt_movto + ' 23:59' AS datetime)

SELECT @qtd_regs = 1

FROM dba.dbo.leg WITH (NOLOCK)

WHERE tp_mensagem = @pTipoMensagem

AND dt_hr_entrada BETWEEN @dt_ini AND @dt_fim

AND flg_enviar = 'S'

AND flg_proc_util = 'N'

AND id_fila = @pIdFila

AND dt_hr_lock <= CONVERT(varchar(23), getdate() - @tempo_espera, 121)

OPTION (RECOMPILE)

IF @@ROWCOUNT <> 0 BEGIN

BEGIN TRANSACTION

UPDATE dba.dbo.leg WITH (ROWLOCK READPAST)

SET dt_hr_lock = '19000101',

guid_lock = '00000000-0000-0000-0000-000000000000'

WHERE tp_mensagem = @pTipoMensagem

AND dt_hr_entrada BETWEEN @dt_ini AND @dt_fim

AND flg_enviar = 'S'

AND flg_proc_util = 'N'

AND id_fila = @pIdFila

AND dt_hr_lock <= CONVERT(varchar(23), getdate() - @tempo_espera, 121)

IF @@ERROR = 0 BEGIN

COMMIT TRANSACTION

END

ELSE BEGIN

ROLLBACK TRANSACTION

RETURN

END

END

SET NOCOUNT OFF

END

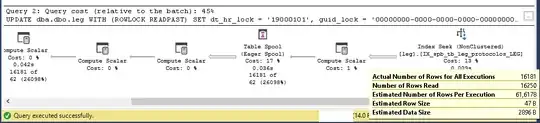

Normally it runs quickly (about 30-50ms), but if the number of rows returned gets above 5000, it starts running above 100-200ms.

My first approach was to rebuild table and indexes, but SQL Server is still missing estimates. The screenshot below is after the rebuild.

CPU time = 0 ms, elapsed time = 0 ms. Table 'leg'. Scan count 1, logical reads 16321, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. Table 'Worktable'. Scan count 1, logical reads 32676, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Any thoughts?

edit:

SQL Server 2017, but my current COMPATIBILITY_LEVEL = 110

As a test, I created a clustered index, rebuild everything but still getting bad estimates.

alter table leg add id bigint identity(1,1)

create clustered index ix_id on leg (id)

alter index all on dbo.leg rebuild

CPU time = 0 ms, elapsed time = 5 ms. Table 'leg'. Scan count 1, logical reads 49703, physical reads 0, read-ahead reads 30, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. Table 'Worktable'. Scan count 1, logical reads 32724, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

New plan: https://www.brentozar.com/pastetheplan/?id=rkZ6mtzoP

**Helpful **links related suggested by @NikitaSerbskiy

Why does SQL Server use a better execution plan when I inline the variable? Why You’re Tuning Stored Procedures Wrong (the Problem with Local Variables)