I am creating a stored procedure in sql server 2019 that needs to use multiple select statements to get a parent row and then its related data. I have the primary key clustered value for the parent so the first query will at most return 1 row.

Should I select everything into a temp table for the first query and then join my subsequent queries to the temp or should I just keep joining to the original table?

I am not sure if the overhead of creating the temp table will overshadow the overhead of joining to the actual table repeatedly.

I have looked at the performance plans and they come out to be the same and the statistics for reads/scans and time are about the same as well.

I think what I am trying to figure out is if I use the temp table, will it relieve pressure on the original table. The original table is heavily read and written to.

I should note that these statements will be inside of a Stored Procedure so I may potentially get a boost from Temporary Object Caching.

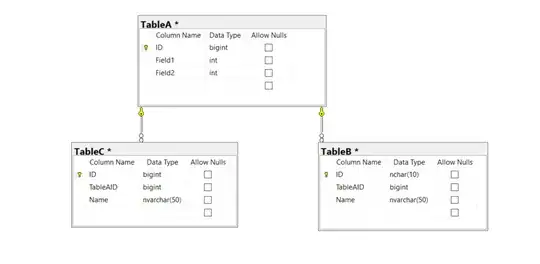

Assume table A has > 1 million rows and has 0-10 rows per entry in TableB and 0-10 per entry in TableC

Queries without temp table

declare @taID bigint=123

select

ta.*

from

TableA ta

where

ta.ID=@taID

and ta.Field1>1

and ta.Field2<1000

select

tb.*

from

TableA ta

inner join TableB tb on ta.ID=tb.TableAID

where

ta.ID=@taID

and ta.Field1>1

and ta.Field2<1000

select

tc.*

from

TableA ta

inner join TableC tc on ta.ID=tc.TableAID

where

ta.ID=@taID

and ta.Field1>1

and ta.Field2<1000

Queries with temp table

declare @taID bigint=123

select *

into #tmpA

from

TableA ta

where

ta.ID=@taID

and ta.Field1>1

and ta.Field2<1000

select * from #tmpA

select

tb.*

from

#tmpA ta

inner join TableB tb on ta.ID=tb.TableAID

select

tc.*

from

#tmpA ta

inner join TableC tc on ta.ID=tc.TableAID