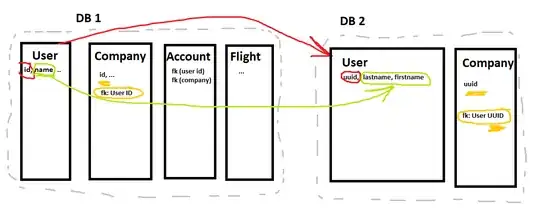

I need to migrate data from a relatively small PostgreSQL database (let's call it DB 1) of a Django REST Framework project to another PostgreSQL database of my FastAPI project (DB 2). Say 500,000 rows.

The tables are slightly different. DB 1 uses IDs as pk, while the second uses UUIDs. There are fk linking the tables.

So, I have to move some Users after changing the ID to UUID, and the name to last/first name.

The DBs are on different servers.

Both databases will be running in parallel for a few months, but traffic will be low. And finally, I will be moving some users initially and later on the rest.

I was thinking of adding columns in DB1 (which is in production) and expose an endpoint for the transfer. My 2nd project will then call the endpoint in my 1st and request the transformed data. Added columns:

- UUIDs,

- Last Name, First Name,

- "Already transferred to DB2"

The new columns in DB1 will only be used for the migration. Nothing else.

The benefits are:

- Single(ish) source of truth

- less bug prone since I'll be using Django (models, Fields, etc.)

Cons:

- I am editing production code and production DB

Is that the right way to do it? Is there a better way?

Note: I have already implemented it as an external script that connects directly to the DBs (SQLAlchemy) and transforms the data (Pandas), but I fear it will become too complex to handle eventually.