Introduction

I have a PostgreSQL table setup as a queue/event-source.

I would very much like to keep the "order" of the events (even after the queue item has been processed) as a source for e2e testing.

I starting to run into query performance slow-downs (probably because of table bloat) and I don't know how to effectively query a table on a changing key.

Initial Setup

Postgres: v15

Table DDL

CREATE TABLE eventsource.events (

id serial4 NOT NULL,

message jsonb NOT NULL,

status varchar(50) NOT NULL,

createdOn timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

CONSTRAINT events_pkey PRIMARY KEY (id)

);

CREATE INDEX ON eventsource.events (createdOn)

Scrape Query (Pseudo Code)

BEGIN; -- Start transaction

SELECT message, status

FROM eventsource.events ee

WHERE status = 'PENDING'

ORDER BY ee.createdOn ASC

FOR UPDATE SKIP LOCKED

LIMIT 10; -- Get the OLDEST 10 events that are pending

-- I found that having a batch of work items was more performant than taking 1 at a time.

...

-- The application then uses the entries as tickets for doing work as in "I am working on these 10 items, no one else touch"

...

UPDATE ONLY eventsource.events SET status = 'DONE' WHERE id = $id_1

UPDATE ONLY eventsource.events SET status = 'DONE' WHERE id = $id_2

UPDATE ONLY eventsource.events SET status = 'FAIL' WHERE id = $id_3

UPDATE ONLY eventsource.events SET status = 'DONE' WHERE id = $id_n

...

END; -- finish transaction

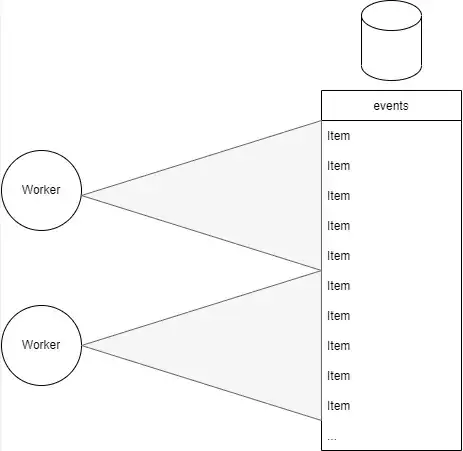

Rough Worker outline

Multiple workers taking batches of work items form the queue then actioning them and reporting their statuses. I want to have as little overlap as possible.

Assessment

When looking at the execution plan it looks like the query has to traverse the entire table to get the records that are in 'PENDING' status.

I thought this might be because of the ORDER BY ee.createdOn ASC at first. But after reviewing the execution plan I saw that the query was traversing the entire table searching for the status, and only THEN ordering it.

Attempt

I saw partial indexes and hoped it could reduce the search space of the queries.

CREATE INDEX ON eventsource.events (status)

WHERE status = 'PENDING'

But I think I made it worse ...

Records are being inserted with the 'PENDING' status and then almost immediately changed to 'DONE' (or 'FAIL') as the application is consuming the queue.

I think this might be destroying the index every time and then recreating it from scratch after the update to the status field (probably very expensive).

Question

What is the effect of updating a partial-index's key / predicate (and if significant) how do I effectively filter a big table on a changing key?

Index Approach

Is my index approach sound?

My first thought was Indexes but maybe partitions would be better suited here?

What happens if the partition key gets changed?

Is it just as destructive as destroying the index?

Index type

I know the default index type is a B-Tree, would a HASH index (or other) be better in this situation?

Under the hood, would changing the index key of a HASH index, result in destroying/recreating the index table the same way it does with a B-Tree?

Index creation

I am unsure what the effect is of the partial index's key vs predicate. What is the effective difference in indexing between:

CREATE INDEX ON eventsource.events (status)

WHERE status = 'PENDING'

and

CREATE INDEX ON eventsource.events (createdOn)

WHERE status = 'PENDING'

Here I am using createdOn because it is in my scrape query but I think id would work too.

Would moving the index key to a different field effect the index creation/recreation? In this instance I moved it from the

statusfield (which will change) to thecreatedOnfield, which won't. I don't quite understand what this SO implies.

And the Postgres docs are a little unclear to me about this type of partial index.