I have a query that hits a 1.4 billion row table.

Everything runs fine with this query, runs sub-second.

Then once every 5 days or so, auto-update stats kicks in and the query starts running almost 60 seconds - It takes on a different plan.

I checked and when the auto-update stats kicks in, the sample rate is less than 1% of the table.

Now, my question is - Can an auto-update stats cause the optimizer to choose a plan that is worse than before?

I can see that the estimations of the bad plan are wildly different to the good plan.

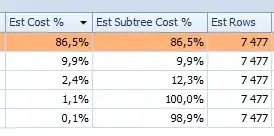

Good Plan:

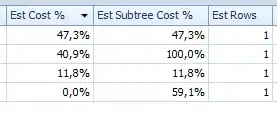

Bad Plan:

The estimations are clearly way off for the bad plan and yet the amount of rows being returned by the query is the same.

At this point, I am trying to confirm whether an auto-stats updates can do this?

What I am attempting now is to do a stats update with a sample of 10% and see what happens.

Update: I have completed a stats update with 10% sampling rate and queries are doing well again.

Have made this a job to run regularly.