My organization is planning to implement a high-availability PostgreSQL cluster using Patroni and etcd. However, we only have two data center sites available, which makes deploying a standard 3-node etcd cluster across separate failure domains challenging.

We understand that running a 2-node etcd cluster increases the risk of split-brain or unavailability if one site becomes unreachable: a 2-node cluster needs both members to form a quorum, so it cannot tolerate losing either one.
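As a quick check of the quorum arithmetic behind this concern: etcd (Raft) needs a strict majority, floor(n/2) + 1, of its voting members to make progress. The sketch below uses hypothetical helper names and only prints that majority and the resulting failure tolerance for small cluster sizes. It illustrates why a 2-member cluster tolerates no failures, the same as a single member.

```python
def quorum_size(members: int) -> int:
    """Smallest strict majority of `members` voting members (Raft quorum)."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can be lost while a majority still remains."""
    return members - quorum_size(members)


if __name__ == "__main__":
    # Print quorum size and fault tolerance for 1..5 members.
    for n in range(1, 6):
        print(f"{n} member(s): quorum={quorum_size(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Note that adding a second member raises the quorum from 1 to 2 without improving fault tolerance; only the third member buys the ability to lose one.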

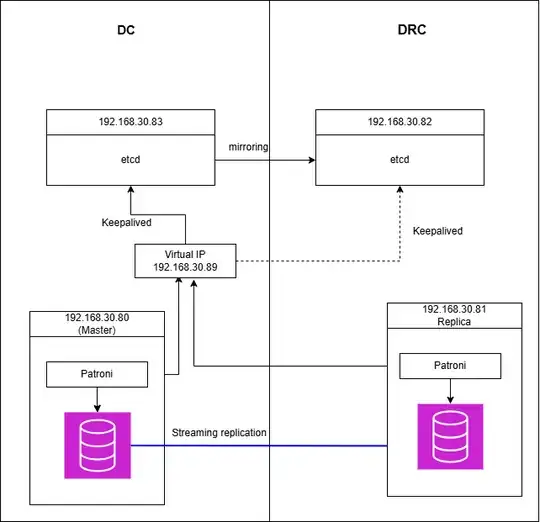

To address this, we came up with the following topology:

DC (Primary Site):

- 192.168.30.80: PostgreSQL node running Patroni (initial master)
- 192.168.30.83: etcd node

DRC (Disaster Recovery Site):

- 192.168.30.81: PostgreSQL node running Patroni (replica)
- 192.168.30.82: backup etcd node

Each site runs a single-node etcd cluster. We use etcd's mirror maker feature (`etcdctl make-mirror`) to continuously relay key creates and updates from the DC cluster to the separate cluster in the DRC. We then use keepalived to manage a floating IP between the two etcd clusters, and Patroni on both PostgreSQL nodes accesses etcd through that floating IP. We have tested that failover still works in this setup.

My questions are:

1. What risks are involved in running this kind of setup?
2. Would it be better to add a lightweight third etcd node in a separate site (e.g., the cloud) to form a proper quorum?
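To compare the options in the second question, the sketch below counts how many voting members survive the loss of each whole site. It assumes one stretched etcd cluster (not the mirrored pair of single-node clusters described above) and ignores network partitions between the surviving sites as well as latency. The site names, including "cloud", and the "2+1 in two sites" layout are illustrative assumptions.

```python
def survives(placement: dict[str, int], lost_sites: set[str]) -> bool:
    """True if the members outside `lost_sites` still form a strict majority."""
    total = sum(placement.values())
    remaining = sum(n for site, n in placement.items() if site not in lost_sites)
    return remaining >= total // 2 + 1


def single_site_failures(placement: dict[str, int]) -> dict[str, bool]:
    """For each site, report whether the cluster keeps quorum if that site is lost."""
    return {site: survives(placement, {site}) for site in placement}


if __name__ == "__main__":
    # Hypothetical layouts: members per site for one stretched etcd cluster.
    topologies = {
        "2 members, 2 sites": {"DC": 1, "DRC": 1},
        "3 members, 2 sites (2+1)": {"DC": 2, "DRC": 1},
        "3 members, 3 sites": {"DC": 1, "DRC": 1, "cloud": 1},
    }
    for name, placement in topologies.items():
        print(name, single_site_failures(placement))
```

Under these assumptions, only the three-site layout keeps quorum after losing any single site; the 2+1 layout survives losing the DRC but not the DC.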