One reason is that the new Cardinality Estimation (CE) framework can use a different method ('coarse alignment') from the original CE (step alignment with interpolation) to estimate join selectivity when histograms are available.

Quoting from my article,

Join Cardinality Estimation using Histogram Coarse Alignment:

The primary changes made to Cardinality Estimation starting with the SQL Server 2014 release are described in the Microsoft White Paper Optimizing Your Query Plans with the SQL Server 2014 Cardinality Estimator by Joe Sack, Yi Fang, and Vassilis Papadimos.

One of those changes concerns the estimation of simple joins with a single equality or inequality predicate using statistics histograms. In the section headed, “Join Estimate Algorithm Changes”, the paper introduced the concept of “coarse alignment” using minimum and maximum histogram boundaries:

For joins with a single equality or inequality predicate, the legacy CE joins the histograms on the join columns by aligning the two histograms step-by-step using linear interpolation. This method could result in inconsistent cardinality estimates. Therefore, the new CE now uses a simpler join estimate algorithm that aligns histograms using only minimum and maximum histogram boundaries.

Although potentially less consistent, the legacy CE may produce slightly better simple-join condition estimates because of the step-by-step histogram alignment. The new CE uses a coarse alignment. However, the difference in estimates may be small enough that it will be less likely to cause a plan quality issue.

You can find more details and a worked example in my article.

Whether coarse alignment can be used depends only on the CE model selected. There is no query hint to affect this in isolation. Undocumented (and unsupported) trace flag 9474 can be used to disable coarse alignment for the curious.

All that said, the new CE was a complete reworking. In all but the simplest cases, you are unlikely to be able to replicate the detailed behaviours of the original CE. There are simply too many different details (e.g. overpopulated primary key scaling).

The new CE was supposed to be simpler and more easily extensible, but this means several useful features from the original CE (developed and refined over a long period of time) are either not present or are replaced with different simplifications. Very few of these are documented.

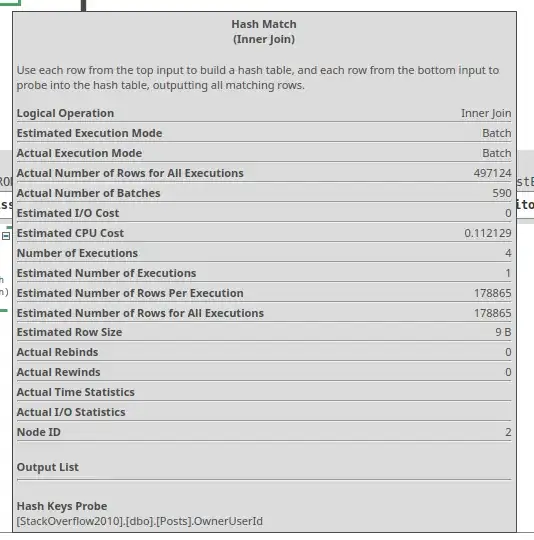

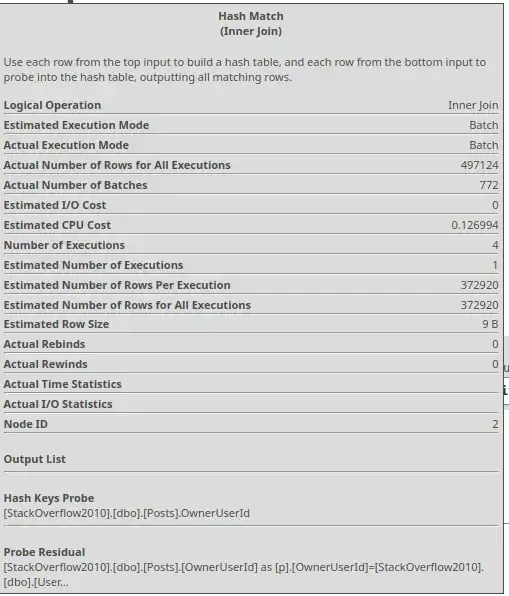

For example, your query contains two join predicates referencing u.Id. The optimizer infers this means p.LastEditorUserId = p.OwnerUserId, which results in a fixed 10% guess in the new CE:

Input tree:

LogOp_Select

CStCollBaseTable(ID=2, CARD=3.7292e+06 TBL: dbo.Posts AS TBL: p)

ScaOp_Comp x_cmpEq

ScaOp_Identifier QCOL: [p].LastEditorUserId

ScaOp_Identifier QCOL: [p].OwnerUserId

Plan for computation:

CSelCalcFixedFilter (0.1)

Selectivity: 0.1

You should not have a mental model of simple and straightforward calculations for either CE model. That simply doesn't capture the reality.

Related Q & A: