In SQL Server at least (can't speak for the other RDBMS you've mentioned, sorry), a single statement will scale better than multiple, to a point. You can test this yourself using your exact statements and data, of course; nobody here can test that for you with your specifics, and your specifics may tilt things one way or another. "Which is faster, x or y?" questions are generally discouraged here because you can test them in your own environment much faster than any of us could throw guesses and logic at you. Especially when you're trying to get an answer for all of the database platforms you've listed - nobody is an expert in all of those and any such answer would either be very biased (like this one) or far too blanket to be useful.

In general, though, the overhead of preparing individual statements (and possibly sending them separately depending on your code and the behavior of your provider) should add up, much like @mustaccio said:

If you want to send three letters, would you go to the post office once carrying three letters, or three times carrying one letter?

This would especially be true in your scenario if each small statement is broken up across individual packets and even different connections. Again, I have no idea how your provider works or how your code sends these statements - if it's a single batch of a variable number of statements, that can actually be harder for SQL Server to optimize than individual single-statement batches, since SQL Server optimizes at the batch level.

Note that the VALUES() clause has an arbitrary limit of 1,000 values, so you may need to create multiple statements depending on how many values you have. The reason is concern over compile time, as Paul White explains here. Also note: Oracle has the same restriction.

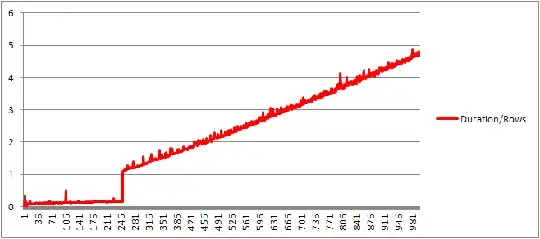

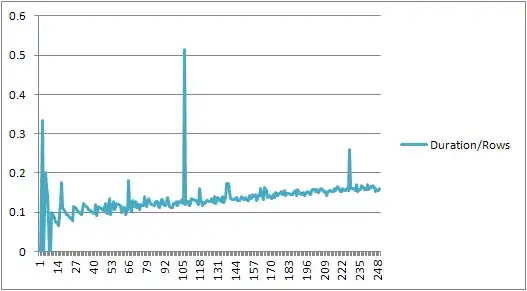

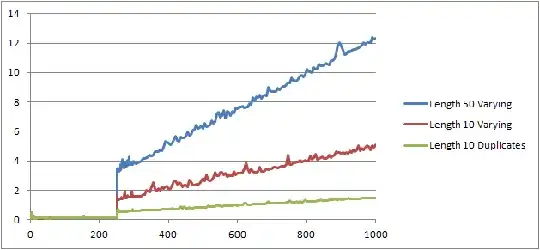

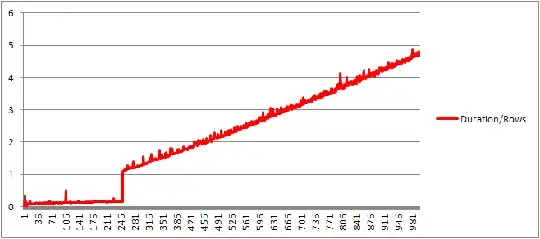

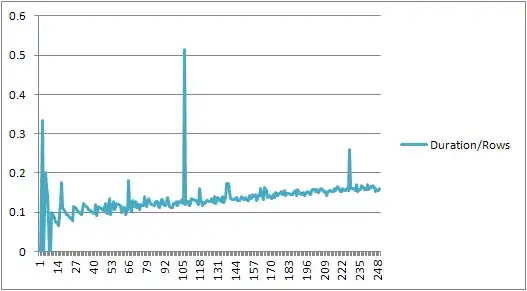

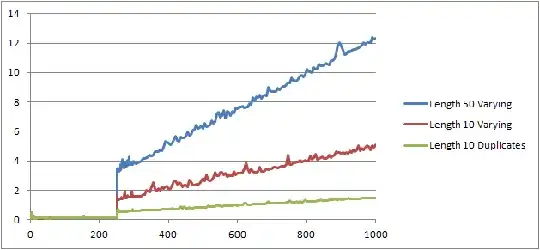

According to Martin Smith's testing, compile time at least is minimal and relatively invariable up to a little over 250 values. See these charts (for details please see his answer):

If you go with a VALUES() clause be aware that each variation (meaning number of actual value sets) will generate its own plan, regardless of whether you use properly parameterized statements or just inline constants, and this is true even when the database's parameterization setting is set to simple. So you may want to consider using the optimize for ad hoc workloads server setting (lots of info here and here to prevent single-use variations from filling the plan cache (generally a good idea for most systems anyway, unless you are CPU-bound and compilation costs are proven to be excessive).

A better answer to this problem is to use table-valued parameters (TVPs), which allow you to send sets of structured data through a single parameter, giving you an efficient way to pass data and a single plan that can be reused regardless of the number of values passed. The catch here is I'm not sure Java understands what those are (in C# you can send, say, a DataTable as a Structured parameter).