I am having MySQL database with all my tables as InnoDB with file_per_table config on. But Still I m seeing huge ibdata file ( ~50GB). All tables are having .ibd file as well. This is a machine I have created by taking dump and loading. What is a reason for this.

2 Answers

You probably have lots of large transactions or one really big transaction.

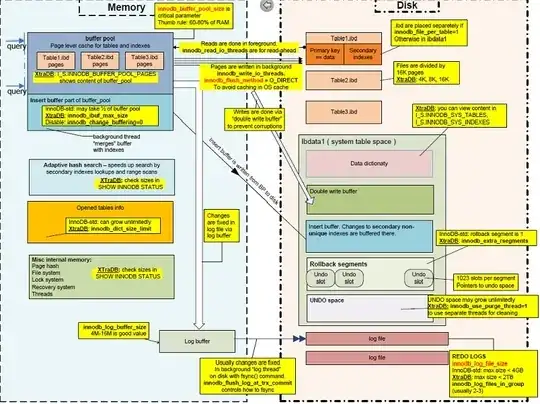

Take a look at Vadim Tkachenko's Pictorial Representation of InnoDB

If you look inside the system table (ibdata1), you see rollback segments and undo logs.

When a rollback segment rolls back a transaction, it has to use the MVCC information it stockpiled in the undo space. That's where most of the growth occurs. Percona mentioned this back on Jun 10, 2010 (Reasons for run-away main Innodb Tablespace)

I have discussed this before

Apr 23, 2013: How can Innodb ibdata1 file grows by 5X even with innodb_file_per_table set?Mar 31, 2014: mysql directory grow to 246G after one query, which failed due to table is fullJun 16, 2014: MySQL Index creation failing on table is full

UPDATE 2015-03-22 15:00 EDT

If you are convinced the data dictionary is the cause, there is only one sure way you can verify this.

- STEP 01) Get another DB Server (Dev or Test)

- STEP 02) Install MySQL on it

- STEP 03) mysqldump the schema-only (using

--no-data) - STEP 04) load the schema-only dump into the empty database

- STEP 05) run

ls -l /var/lib/mysql/ibdata1 | awk '{print $5}'

The number that comes out will be the size will be the size of ibdata1 with just data dictionary entries for the current list of tables, but with no data in them.

In your last comment, you said

So open transaction doesnot seem to be right reason. We use to create some 200-300 table on daily basis (by our ETL tool) and drop them after some processing periodically ( drop some 2k-5k tables once in 2-3weeks), could this attribute to the big file ?

You could be right for same reason: Just like rollback segments and the undo space have no provision for reclaiming unused diskspace for the OS, dropping tables and creating new ones would likewise have no provision for reclaiming unused diskspace for the OS.

To prove that, you would have to use that new server with all tables but no data. Then, perform the same dropping and creating of tables every week. If the ibdata1 grows just for doing that, then you have proven undeniably that dropping and creating tables alone can grow ibdata1 with innodb_file_per_table enabled.

If you want to cleanup ibdata1, please see my posts

Sep 26, 2012: How do I shrink the innodb file ibdata1 without dumping all databases? (on why you have to dump all data, remove ibdatat1, and create a new ibdata1)Oct 29, 2010: Howto: Clean a mysql InnoDB storage engine? (on how to actually do this)

GIVE IT A TRY!!!

- 185,223

- 33

- 326

- 536

I wish add something to the answer of Rolando. From Mysql 5.6 it is possible to put out undo logs away from ib_data1. I have tried the following experiment.

On a fresh installation I have put undo log out of system tablespace (ibdata1) and created a lot of tables to check how ibdata1 grows.

Then I created a big table and opened a transaction in one session. Into another session I raised an update, so I checked how undo tablespace grows in this case.

Creating a lot of tables

The manual page is Storing InnoDB Undo Logs in Separate Tablespaces.

First I added to .cnf the following lines:

innodb-undo-tablespaces=2

Recreated the datadir and checked the size of system and undo tablespaces:

$ mysql_instal_db --basedir=... --datadir --defaults-file=...

$ cd data

$ du -h undo00* ib*

10M undo001

10M undo002

12M ibdata1

48M ib_logfile0

48M ib_logfile1

Fresh ibdata1 (with my configuration) is around 12M. I launched a simple script to create 200000 tables like this:

create table t_<xxx> (i int unsigned auto_increment, c char, d datetime, primary key(i) ) engine=innodb;

After the tables creation the tablespaces sizes are:

$ du -h undo00* ib*

10M undo001

10M undo002

141M ibdata1

48M ib_logfile0

48M ib_logfile1

The 200000 tables occupy less than 129M. Next I dropped tables and recreate them. No difference to the size of ibdata1.

Playing with the undo tablespaces

To continue my experiment I created a table sbtest1 with 4000000 rows using sysbench:

$ sysbench --test=<path>/update_index.lua --mysql-host=... --mysql-port=... --mysql-user=... --mysql-password=... --oltp-table-size=4000000 prepare

$ du -h sbtest1.ibd

957M sbtest1.ibd

I started a transaction in session 1:

session1> start transaction

...

session1> select * from sbtest limit 1;

select * from sbtest1 limit 1;

+----+---------+-----------------------+----------------------------+

| id | k | c | pad |

+----+---------+-----------------------+----------------------------+

| 1 | 2006885 | 08566691963-88624.... | 63188288836-92351140030... |

+----+---------+-----------------------+----------------------------+

1 row in set (0.00 sec)

Sizes don't changed:

$ du -h ibdata1 undo00* ib_logfile*

141M ibdata1

49M undo001

10M undo002

48M ib_logfile0

48M ib_logfile1

I started another session:

session2> use information_schema;

...

session2> select trx_id, trx_rows_locked, trx_isolation_level from INNODB_TRX;

+---------+-----------------+---------------------+

| trx_id | trx_rows_locked | trx_isolation_level |

+---------+-----------------+---------------------+

| 3714243 | 0 | REPEATABLE READ |

+---------+-----------------+---------------------+

1 row in set (0.00 sec)

session2> use sbtest;

...

session2> update sbtest1 set k = k + 1;

Query OK, 4000000 rows affected (3 min 40.75 sec)

Rows matched: 4000000 Changed: 4000000 Warnings: 0

If I check size of ibdata1:

$ du -h ibdata1 undo00* ib_logfile*

141M ibdata1

209M undo001

10M undo002

48M ib_logfile0

48M ib_logfile1

If I commit transaction on session1, undo001 doesn't shrink.

Conclusion

A open transaction could grow the ibdata1 file more than 200000 tables. I know that it is a very simple test and it can be very different by a production workload.

Interesting into mysql 5.7 there is the possibilitliy to truncate undo tablespace, see manual: Truncating Undo Logs That Reside in Undo Tablespaces

Hope it help.

- 872

- 5

- 14