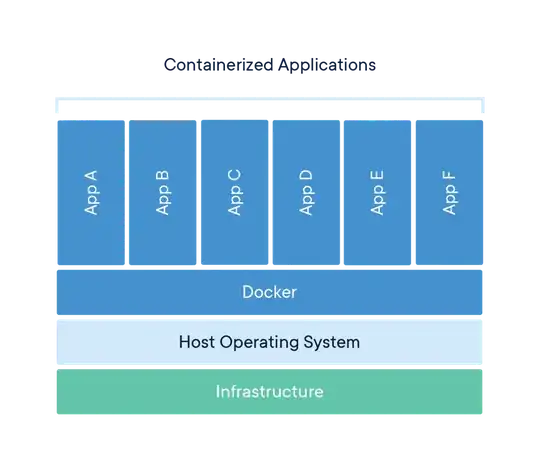

I'm still fairly new to Docker too, but I have a few small projects that do exactly what you're asking about. From my own trial and error (mostly error...), I'd suggest using a docker-compose.yml whose network assigns static internal IPs, so that each container gets a predictable, repeatable address that the other containers can be configured to reach.

I'm not familiar with BaseX, but here is a sample setup using docker-compose version: "3.7" that could easily run multiple apps and/or be extended or repurposed to fit your hypothetical.

This example docker-compose.yml contains the following:

A separate container for populating my app-volume volume with the contents of a public GitHub repo.

An Apache Web Server container with PHP-FPM enabled for a "selective backend" that gets built from a Dockerfile in the ~/dockerbuild directory.

An nginx web server container that acts as a reverse proxy, passing all things PHP to Apache while serving and caching pretty much everything else itself.

A MariaDB container in the mix for the app DB that I populate with a mysqldump file after the app gets built.

This is a slimmed-down version of my docker-compose.yml, so note that you can add a variety of things like healthcheck: and command: to fit your needs.

Run docker-compose up -d --build to both build and start your app in one command (though you'll probably also want to write your own bash (.sh) script to run afterwards for those important finishing touches).
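
As a rough idea of what such a follow-up script might contain, here is a minimal sketch that waits for the MariaDB healthcheck to pass and then loads a mysqldump file. The container name and database name come from the compose file further down; the function names, the dump path, and the DB_CLIENT default are my own assumptions (newer MariaDB images may ship the client as mariadb rather than mysql).

```shell
#!/usr/bin/env bash
# Sketch of a post-"up" helper. Function names and defaults are illustrative.

DB_CONTAINER="${DB_CONTAINER:-mariadb-build-demo0}"  # container_name from the compose file
DB_NAME="${DB_NAME:-dbnamegoeshere}"                 # MYSQL_DATABASE from the compose file
DB_CLIENT="${DB_CLIENT:-mysql}"                      # assumed client binary inside the image

# Print the container's healthcheck status (starting, healthy, or unhealthy).
db_health() {
  docker inspect --format '{{.State.Health.Status}}' "$DB_CONTAINER"
}

# Poll until the container reports healthy; give up after $1 attempts (default 30).
wait_for_db() {
  local attempts="${1:-30}" i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$(db_health 2>/dev/null)" = "healthy" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_SECONDS:-2}"
  done
  echo "database container never became healthy" >&2
  return 1
}

# Feed a mysqldump file ($1) into the app database as root.
# MYSQL_ROOT_PASSWORD is expanded inside the container, not on the host.
import_dump() {
  docker exec -i "$DB_CONTAINER" \
    sh -c "exec $DB_CLIENT -uroot -p\"\$MYSQL_ROOT_PASSWORD\" $DB_NAME" < "$1"
}
```

With those functions sourced, the whole start-up could be something like docker-compose up -d --build && wait_for_db && import_dump ./dump.sql, where ./dump.sql stands in for wherever your dump actually lives.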

Take note of static-network. And while I'm using named volumes in the example, that's just a preference of mine.
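
One practical detail about that named volume: the file below declares app-volume with external: true, which tells Compose the volume was created outside of it, so it has to exist before the first up. A minimal sketch of a pre-flight helper, assuming the plain docker CLI is available (the function name ensure_volume is made up for illustration):

```shell
# Create a named volume only if it does not exist yet.
ensure_volume() {
  local name="$1"
  if docker volume inspect "$name" >/dev/null 2>&1; then
    echo "volume $name already exists"
  else
    docker volume create "$name" >/dev/null && echo "volume $name created"
  fi
}
```

Calling ensure_volume app-volume before docker-compose up -d --build should be enough for this example.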

    version: "3.7"

    services:

      github_repo_clone:
        image: debian:latest
        container_name: github_repo_clone
        networks:
          static-network:
            ipv4_address: 172.20.0.254
        command: bash -c "
          apt-get update &&
          apt-get install git -y &&
          rm -rf examplerepo &&
          git clone https://github.com/exampleuser/examplerepo.git &&
          rm -rf /var/www/vhosts/exampleuser/examplerepo &&
          mkdir -p /var/www/vhosts/exampleuser/examplerepo &&
          cp -rapv examplerepo/* /var/www/vhosts/exampleuser/examplerepo &&
          rm -rf examplerepo &&
          tail -f /dev/null
          "
        volumes:
          - app-volume:/var/www/vhosts

      apache_php-fpm_backend:
        container_name: debian-build-demo0
        build: ~/dockerbuild
        ports:
          - "8080:8080"
        networks:
          static-network:
            ipv4_address: 172.20.0.2
        volumes:
          - app-volume:/var/www/vhosts

      nginx_rp:
        image: nginx:latest
        container_name: nginx-build-demo0
        ports:
          - "80:80"
          - "443:443"
        networks:
          static-network:
            ipv4_address: 172.20.0.3
        volumes:
          - app-volume:/var/www/vhosts

      mariadb:
        restart: always
        image: mariadb:latest
        container_name: mariadb-build-demo0
        ports:
          - "3306:3306"
        networks:
          static-network:
            ipv4_address: 172.20.0.10
        environment:
          - MYSQL_ROOT_PASSWORD=4007p@$$w04d
          - MYSQL_USER=dbusernamegoeshere
          - MYSQL_PASSWORD=@p@$$w04d
          - MYSQL_DATABASE=dbnamegoeshere
        volumes:
          - app-volume:/var/www/vhosts
        healthcheck:
          test: ["CMD", "mysqladmin", "ping", "-h", "localhost"]
          timeout: 10s
          retries: 5

    volumes:
      app-volume:
        external: true

    networks:
      static-network:
        driver: bridge
        ipam:
          driver: default
          config:
            - subnet: 172.20.0.0/16
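
Once the stack is up, one way to confirm that the static addresses actually took effect is to ask Docker for each container's IP and compare it with the ipv4_address pinned above. This is only a sketch built on docker inspect; the helper names container_ip and check_ip are hypothetical.

```shell
# Print the IP address(es) a container holds on its attached networks.
container_ip() {
  docker inspect \
    --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Compare a container's actual address ($1) with the expected one ($2).
check_ip() {
  local container="$1" expected="$2" actual
  actual="$(container_ip "$container")"
  if [ "$actual" = "$expected" ]; then
    echo "ok: $container has $expected"
  else
    echo "mismatch: $container has '$actual', expected $expected" >&2
    return 1
  fi
}
```

For the file above that would mean calls such as check_ip nginx-build-demo0 172.20.0.3 and check_ip mariadb-build-demo0 172.20.0.10.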

My cleanup command for when I take the app down is kind of ugly, but not terribly difficult to read. It goes something like this:

    docker-compose down -v ; docker rmi -f dockerbuild_apache_php-fpm_backend:latest mariadb:latest nginx:latest debian:latest ; docker rmi -f $(docker images -q --filter "dangling=true") ; docker rmi -f $(docker images -q --filter label=stage=intermediate) ; docker volume prune -f
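
If the one-liner gets hard to maintain, the same steps can be spelled out as a small script. The sketch below is meant to behave like the command above, with one deliberate difference: it skips docker rmi when a filter matches nothing, since calling it with no image arguments only prints a usage error. The function names are my own.

```shell
# Remove images matching a filter ($1), skipping the call when nothing matches.
remove_images_by_filter() {
  local ids
  ids="$(docker images -q --filter "$1")"
  if [ -n "$ids" ]; then
    # Intentionally unquoted so each image ID becomes its own argument.
    docker rmi -f $ids
  fi
}

# Tear the stack down and clean up the images and volumes it left behind.
teardown() {
  docker-compose down -v
  docker rmi -f dockerbuild_apache_php-fpm_backend:latest \
    mariadb:latest nginx:latest debian:latest
  remove_images_by_filter "dangling=true"
  remove_images_by_filter "label=stage=intermediate"
  docker volume prune -f
}
```

Running teardown from the directory that holds the docker-compose.yml replaces the long command.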

I think each "independent app" could (and probably should) have its own docker-compose.yml and/or Dockerfiles, but you may find that certain containers lend themselves to custom command: instructions (such as the github_repo_clone example) that can be reused and shared across multiple "apps".
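
To make that sharing concrete, the clone-and-copy steps from the github_repo_clone command: could be pulled into one parameterized shell function that each app calls with its own repo URL and target path. This is a sketch under my own assumptions: the function name populate_from_repo is invented, it uses a temporary directory instead of a fixed examplerepo folder, and it does a shallow clone (--depth 1), which the original command does not.

```shell
# Clone a repo ($1) and copy its working tree into a directory ($2),
# e.g. a path inside the shared app-volume.
populate_from_repo() {
  local repo_url="$1" target_dir="$2" workdir
  workdir="$(mktemp -d)" || return 1
  git clone --depth 1 "$repo_url" "$workdir/repo" || { rm -rf "$workdir"; return 1; }
  rm -rf "$target_dir"
  mkdir -p "$target_dir"
  # Same glob as the original "cp -rapv examplerepo/*": top-level dotfiles
  # such as .git are not copied.
  cp -a "$workdir/repo"/* "$target_dir"/
  rm -rf "$workdir"
}
```

The example from the compose file would then boil down to populate_from_repo https://github.com/exampleuser/examplerepo.git /var/www/vhosts/exampleuser/examplerepo, run after git has been installed in the container.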