I have been gradually integrating Prometheus into my monitoring workflows to gather detailed metrics about running infrastructure.

Along the way, I keep running into a peculiar issue: sometimes an exporter that Prometheus is supposed to pull data from becomes unresponsive, perhaps because a network misconfiguration has made it unreachable, or simply because the exporter crashed.

Whatever the reason, some of the data I expect to see in Prometheus is missing, and the series contain nothing for a certain time period. Sometimes one exporter failing (timing out?) also seems to cause others to fail. (Perhaps the first timeout pushes the entire job past a top-level timeout? I am just speculating.)

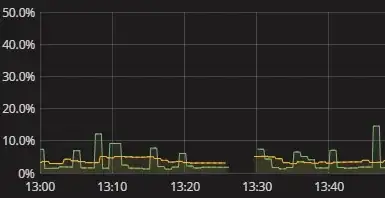

All I see is a gap in the series, as shown in the graph above. Nothing appears in the log when this happens, and Prometheus's self-metrics also seem fairly barren. So far I have had to resort to manually replicating what Prometheus does and seeing where it breaks. This is irksome; there must be a better way! I do not need real-time alerts, but I at least want to be able to see that an exporter failed to deliver data. Even a boolean "hey, check your data" flag would be a start.
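For reference, the kind of manual check described above can be sketched in Python roughly as follows. This is a minimal sketch, not Prometheus's own scrape logic: it assumes each exporter serves its metrics as plain text over HTTP, and the function name `probe_target` and the address `http://localhost:9100/metrics` are hypothetical. It fetches one target with a timeout and reports a boolean up/down flag like the one wished for above, plus the fetch duration, the number of sample lines, and the error if the fetch failed.

```python
import http.client
import time
import urllib.request


def probe_target(url: str, timeout: float = 10.0) -> dict:
    """Fetch an exporter's metrics page once, roughly as a scraper would.

    Hypothetical helper: returns whether the fetch succeeded ("up"), how long
    it took, how many sample lines came back, and the error if it failed.
    """
    # Ignore any proxy settings from the environment (a choice for this sketch).
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    start = time.monotonic()
    try:
        with opener.open(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException) as exc:
        # Covers refused connections, DNS failures, timeouts and non-2xx
        # responses (urllib raises HTTPError, an OSError subclass, for those).
        return {
            "up": 0,
            "duration_s": time.monotonic() - start,
            "samples": 0,
            "error": repr(exc),
        }
    # Count non-empty lines that are not "# HELP" / "# TYPE" comment lines.
    samples = sum(
        1 for line in body.splitlines() if line.strip() and not line.startswith("#")
    )
    return {
        "up": 1,
        "duration_s": time.monotonic() - start,
        "samples": samples,
        "error": None,
    }


if __name__ == "__main__":
    # Hypothetical exporter address; substitute your own targets.
    for target in ["http://localhost:9100/metrics"]:
        print(target, probe_target(target, timeout=5.0))
```

Running it against each target in turn shows which ones refuse connections or time out and how long the others take, which is exactly the information a gap in the graph does not reveal.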

How can I get meaningful information about Prometheus failing to obtain data from exporters? How can I find out why gaps exist without manually simulating Prometheus's data gathering? And what are sensible practices here, perhaps even for monitoring data collection in general, beyond Prometheus?