I am not sure whether this question fits this community, but I will ask anyway. I work on CVT engines, and I have an idea for modifying my controller ahead of a slope (road gradient) so that the vehicle speed does not drop. I have a radar mounted on the vehicle that gives me information about an upcoming slope: the distance to the slope (in meters) and the steepness of the slope as a percentage (100% being a 90deg wall).

Before I continue, I should mention that this entire discussion concerns Cruise Control mode, in which the controller maintains the vehicle speed irrespective of the engine RPM.

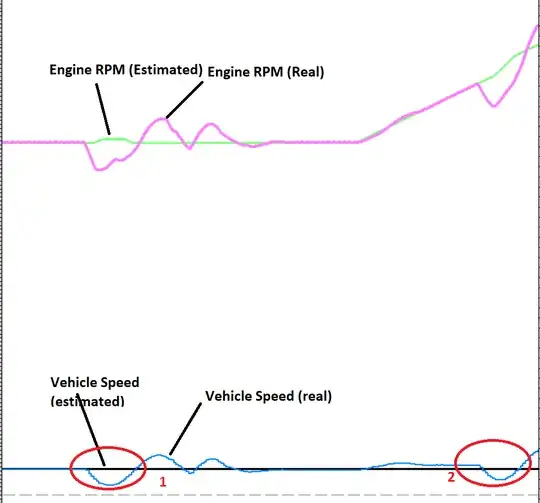

My first approach was to slowly increase the engine RPM as the slope approaches, so that the vehicle speed does not drop. Here is what I simulated:

1) At the red 1, I simulate a 5% slope, and the speed drops by around 10%.

2) At the red 2, I simulate a 5% slope again, but before the slope I slowly increase the engine RPM, so the vehicle speed drops by only 4%.
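The gradual RPM increase in case 2 can be written as a linear ramp whose rate follows from the time left before the slope begins. This is only a minimal sketch, assuming the vehicle speed stays constant until the slope and that a target RPM has already been chosen; the function name `rpm_ramp_rate` and the numbers in the usage example are hypothetical and do not come from the simulation.

```python
def rpm_ramp_rate(distance_m, speed_mps, rpm_now, rpm_target):
    """Return the RPM change per second needed to reach rpm_target
    at the moment the vehicle arrives at the slope.

    Assumptions (not from the original post): constant vehicle speed
    until the slope, and a linear RPM ramp.
    """
    if distance_m <= 0 or speed_mps <= 0:
        raise ValueError("distance and speed must be positive")
    # Time remaining before the slope starts, in seconds.
    time_to_slope_s = distance_m / speed_mps
    return (rpm_target - rpm_now) / time_to_slope_s


# Hypothetical example: slope 200 m ahead, 20 m/s, raise RPM from 1500 to 1800.
rate = rpm_ramp_rate(200.0, 20.0, 1500.0, 1800.0)
print(f"ramp rate: {rate:.1f} rpm/s")
```

A linear ramp is the simplest choice; it says nothing about how large the RPM increase should be, only how fast to apply it once the target is known.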

From this, I conclude that increasing the engine RPM ahead of the slope is a good method. However, I did it without any proper math behind it (that is, how quickly or slowly the RPM should be raised). I want to implement it with proper logic and math. If anyone can help me with the math or point me in the right direction, I'd be grateful.
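One possible starting point for the math is the extra power needed to hold speed on the slope, which comes from the gravity component along the road, F = m·g·sin(θ), multiplied by the vehicle speed. The sketch below assumes a point-mass vehicle and the usual rise-over-run definition of grade, in which 100% corresponds to 45°; if the radar really reports 100% for a vertical wall, the angle conversion would have to change. The mass and speed values are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def grade_angle_rad(slope_percent):
    # Assumption: grade = 100 * rise / run, so 100% means 45 degrees.
    return math.atan(slope_percent / 100.0)


def extra_power_w(mass_kg, speed_mps, slope_percent):
    """Additional power (watts) needed to keep speed_mps on the slope.

    Point-mass model: only the gravity component along the road is
    considered; drag and rolling resistance are assumed unchanged.
    """
    theta = grade_angle_rad(slope_percent)
    grade_force_n = mass_kg * G * math.sin(theta)
    return grade_force_n * speed_mps


# Hypothetical vehicle: 1500 kg at 25 m/s on a 5% slope (roughly 18 kW extra).
print(f"{extra_power_w(1500.0, 25.0, 5.0) / 1000.0:.1f} kW")
```

Given this power demand and the engine's power curve, the target RPM is the operating point that can deliver it; the distance reported by the radar then determines how much time is available to reach that point.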