My application runs one pod per user. The resource requests and limits for the application container are set up like so:

    resources:
      limits:
        cpu: 250m
        memory: 768Mi
      requests:
        cpu: 100m
        memory: 512Mi

The nodes in my nodepool have 8GB of memory each. I started up a bunch of user instances to begin testing, and watched my resource metrics go up as I started each one:

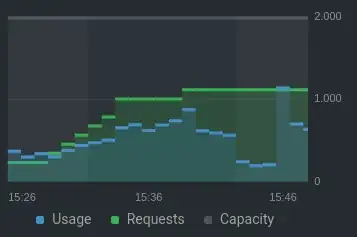

CPU:

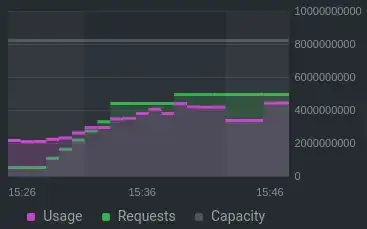

Memory:

At 15:40, I saw the event logs show this error (note: the first node is excluded using a taint):

    0/2 nodes are available: 1 Insufficient memory, 1 node(s) didn't match node selector.

Why did this happen when the memory and CPU requests were still well below the total capacity (~50% for CPU, ~60% for memory)?

Here is some relevant info from `kubectl describe node`:

    Non-terminated Pods: (12 in total)
      Namespace     Name                                                             CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
      ---------     ----                                                             ------------  ----------  ---------------  -------------  ---
      ide           theia-deployment--ac031811--football-6b6d54ddbb-txsd4            110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    13m
      ide           theia-deployment--ac031811--footballteam-6fb7b68794-cv4c9        110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    12m
      ide           theia-deployment--ac031811--how-to-play-football-669ddf7c8cjrzl  110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    14m
      ide           theia-deployment--ac031811--packkide-7bff98d8b6-5twkf            110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    9m54s
      ide           theia-deployment--ac032611--static-website-8569dd795d-ljsdr      110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    16m
      ide           theia-deployment--aj090111--spiderboy-6867b46c7d-ntnsb           110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    2m36s
      ide           theia-deployment--ar041311--tower-defenders-cf8c5dd58-tl4j9      110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    14m
      ide           theia-deployment--np091707--my-friends-suck-at-coding-fd48ljs7z  110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    4m14s
      ide           theia-deployment--np091707--topgaming-76b98dbd94-fgdz6           110m (5%)     350m (18%)  528Mi (9%)       832Mi (15%)    5m17s
      kube-system   csi-azurefile-node-nhbpg                                         30m (1%)      400m (21%)  60Mi (1%)        400Mi (7%)     12d
      kube-system   kube-proxy-knq65                                                 100m (5%)     0 (0%)      0 (0%)           0 (0%)         12d
      lens-metrics  node-exporter-57zp4                                              10m (0%)      200m (10%)  24Mi (0%)        100Mi (1%)     6d20h
    Allocated resources:
      (Total limits may be over 100 percent, i.e., overcommitted.)
      Resource                       Requests      Limits
      cpu                            1130m (59%)   3750m (197%)
      memory                         4836Mi (90%)  7988Mi (148%)
      ephemeral-storage              0 (0%)        0 (0%)
      hugepages-1Gi                  0 (0%)        0 (0%)
      hugepages-2Mi                  0 (0%)        0 (0%)
      attachable-volumes-azure-disk  0             0
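As a sanity check on the output above, here is a minimal Python sketch that re-adds the per-pod requests and estimates how much memory is left for new requests. The percentages are taken relative to the node's allocatable resources, which is what the scheduler compares pod requests against. The sketch assumes the displayed percentages are truncated to whole numbers, which is consistent with the figures shown but is my assumption. The helper name `allocatable_bounds` is hypothetical and only for illustration.

```python
# Requests per pod as (CPU millicores, memory MiB), copied from the table above.
USER_POD = (110, 528)
SYSTEM_PODS = [(30, 60), (100, 0), (10, 24)]  # csi-azurefile, kube-proxy, node-exporter
pods = [USER_POD] * 9 + SYSTEM_PODS

cpu_requested = sum(cpu for cpu, _ in pods)  # 1130, matches "1130m (59%)"
mem_requested = sum(mem for _, mem in pods)  # 4836, matches "4836Mi (90%)"


def allocatable_bounds(amount, shown_percent):
    """Allocatable range implied by a percentage truncated to a whole number.

    ASSUMPTION: the displayed percentage is floor(amount / allocatable * 100).
    """
    low = amount * 100 / (shown_percent + 1)  # exclusive lower bound
    high = amount * 100 / shown_percent       # inclusive upper bound
    return low, high


mem_low, mem_high = allocatable_bounds(mem_requested, 90)
headroom_low = mem_low - mem_requested
headroom_high = mem_high - mem_requested

print(f"CPU requested:    {cpu_requested}m")
print(f"Memory requested: {mem_requested}Mi")
print(f"Implied allocatable memory: {mem_low:.0f}Mi to {mem_high:.0f}Mi")
print(f"Memory left for new requests: {headroom_low:.0f}Mi to {headroom_high:.0f}Mi")
print(f"Additional {USER_POD[1]}Mi pods that fit: "
      f"{int(headroom_low // USER_POD[1])} to {int(headroom_high // USER_POD[1])}")
```

Under that assumption, the memory left for new requests comes out at roughly 478 to 537 MiB, so at most one more 528Mi pod would fit by requests, regardless of how much memory the running pods actually use.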