My goal is to implement a two-node HTTP load balancer using a virtual IP (VIP). For this task I picked Pacemaker (for virtual IP switching) and Caddy as the HTTP load balancer. The choice of load balancer is not the point of this question. :)

My requirement is simple: I want the virtual IP to be assigned to a host where a healthy, working Caddy instance is running.

Here is how I implemented it using Pacemaker:

Disable the STONITH feature

pcs property set stonith-enabled=false

Ignore quorum policy

pcs property set no-quorum-policy=ignore

Set up the virtual IP

pcs resource create ClusterIP ocf:heartbeat:IPaddr2 ip=123.123.123.123

Set up the Caddy resource using the systemd provider. By default a resource runs on only one node at a time, so I clone it; a cloned resource runs on all nodes at the same time by default.

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/6/html/configuring_the_red_hat_high_availability_add-on_with_pacemaker/ch-advancedresource-haar

pcs resource create caddy systemd:caddy clone

Add a constraint so that the virtual IP is assigned and the application is running on the same node.

pcs constraint colocation add ClusterIP with caddy-clone INFINITY
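
To check that the resources and the constraint ended up as intended, something like the following could be run on either node. This is only a sketch: it assumes the resource names ClusterIP and caddy-clone from the commands above, and the exact subcommand spelling may differ between pcs versions.

```shell
# Show cluster, node and resource state
# (the caddy clone is expected to be started on both nodes)
pcs status

# List colocation constraints; the ClusterIP / caddy-clone rule should appear here
pcs constraint colocation
```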

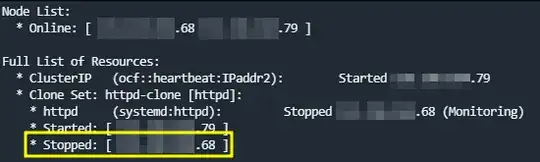

However, if I SSH to the node where the virtual IP is assigned, break the Caddy configuration file, and run systemctl restart caddy, then after some time Pacemaker detects that Caddy failed to start and simply puts it into the stopped state.

How do I force Pacemaker to keep restarting my systemd resource instead of putting it into the stopped state?

On top of that, if I fix the configuration file and run systemctl restart caddy, Caddy starts, but Pacemaker still keeps the resource in the stopped state.
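
The failed state described above can be inspected, and cleared by hand, roughly as follows. This is a sketch only, assuming the resource name caddy from the setup above; clearing the failure manually is a workaround, not the automatic restart behaviour asked about here.

```shell
# Show resource state, including any recorded failed actions
pcs status --full

# Show how many failures Pacemaker has recorded for the resource
pcs resource failcount show caddy

# Clear the recorded failures so Pacemaker re-probes the resource
# and can start managing it again
pcs resource cleanup caddy
```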

And on top of that, if I stop the other node, the virtual IP is not assigned anywhere because of the constraint below:

Add a constraint so that the virtual IP is assigned and the application is running _on the same_ node.

pcs constraint colocation add ClusterIP with caddy-clone INFINITY

Can someone point me in the right direction as to what I am doing wrong?