I'm working on a project to monitor the vGPU usage of virtual machines.

The hypervisor is VMware ESXi, managed by vCenter. We have NVIDIA A16 cards installed in the hosts and have assigned A16 vGPUs to a couple of Windows VMs on one host. These vGPUs are all allocated on the same physical GPU chip.

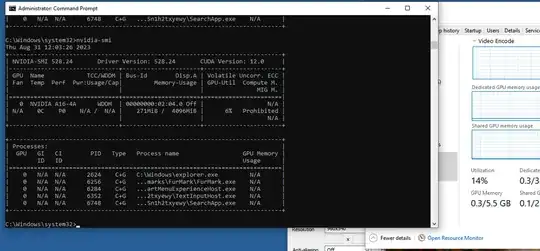

I tried using `nvidia-smi` to retrieve vGPU usage on both the host and the VMs.

On the host I ran `nvidia-smi vgpu`, and inside the VMs I ran `nvidia-smi`.
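For reference, the guest-side reading can be collected in a scriptable form instead of read off the default table. This is a minimal sketch, assuming Python 3.9+ in the guest with `nvidia-smi` on the PATH; it uses the `utilization.gpu` query field (see `nvidia-smi --help-query-gpu`), and the helper names `parse_utilization` and `sample_guest_utilization` are hypothetical.

```python
import subprocess

# One CSV line per visible GPU, no header, no "%" suffix.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu",
    "--format=csv,noheader,nounits",
]


def parse_utilization(csv_text: str) -> list[int]:
    """Return one utilization percentage per GPU line.

    Lines that are not plain integers (e.g. "[N/A]") are skipped.
    """
    values = []
    for line in csv_text.splitlines():
        field = line.strip()
        if field.isdigit():
            values.append(int(field))
    return values


def sample_guest_utilization() -> list[int]:
    """Run nvidia-smi once inside the guest and return per-GPU utilization %."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_utilization(out)
```

Calling `sample_guest_utilization()` periodically (for example once per second) yields a time series that can be lined up against the guest OS counters.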

However, the metrics reported by `nvidia-smi` were always different from those reported by Windows inside the VM.

For example, `nvidia-smi` could report usage as low as 6%, while Windows Task Manager consistently showed around 15%.
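One thing worth ruling out before comparing single readings is that the two tools are sampling different moments or intervals. A minimal sketch, assuming both sources have been logged as hypothetical `(timestamp_seconds, percent)` pairs: average each series over the same time window and compare the means rather than individual snapshots.

```python
from statistics import mean


def window_mean(samples, start, end):
    """Average the percentages of (timestamp, percent) samples with start <= t < end."""
    values = [pct for t, pct in samples if start <= t < end]
    if not values:
        raise ValueError("no samples in window")
    return mean(values)


def window_gap(guest_samples, host_samples, start, end):
    """Guest-reported mean minus host-reported mean over the same window."""
    return window_mean(guest_samples, start, end) - window_mean(host_samples, start, end)
```

If the gap stays consistently large over longer windows, the difference is unlikely to come from sampling timing alone.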

We prefer to trust the metrics reported by the guest OS, since they reflect the real demand of the workload.

My questions are: what exactly do the `nvidia-smi` metrics measure, and where do they come from? Why are the results so different? And is there a way to adjust the result so that it reflects the real demand inside the guest?

Thanks for any pointers!