A 4 TB disk requires GPT, and you can't have both a whole-disk partitionable RAID and disks that the firmware recognizes as bootable (strictly impossible with EFI; not strictly impossible with legacy BIOS, but that requires dark magic you don't want to know).

(It's a shame the Debian installer even accepted this scheme. It shouldn't have: it is strictly invalid and could not possibly work.)

This is because the GPT is actually stored both at the beginning and at the end of the device. When a whole-disk RAID member is read without interpreting the MD metadata, which is how the firmware sees it, the two GPT copies are never both where they are expected:

- for the v1.0 MD superblock, the second GPT (at the end) will be missing (moved earlier, to a place the firmware doesn't expect);

- for v1.1 and v1.2 MD superblocks, the first GPT (at the beginning) will be shifted, so the firmware won't find it.

Either way, the firmware will not recognize the disks as having a valid partition table and will refuse to boot from them.
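The mismatch can be checked with plain sector arithmetic. This is only a sketch: the disk size, the 128 sectors reserved at the end for v1.0, and the 262144-sector data offset for v1.2 are assumed example values (the real data offset of a member is shown by `mdadm --examine`), and the function names are made up for illustration.

```sh
# All values are 512-byte sectors (LBAs) counted on the raw disk.
DISK_SECTORS=7814037168   # assumed size of a "4 TB" disk

# Where the firmware looks: primary header at LBA 1, backup at the last LBA.
firmware_primary() { echo 1; }
firmware_backup()  { echo $(( $1 - 1 )); }        # $1 = disk sectors

# Where a GPT written inside the array lands on the raw disk.
array_primary() { echo $(( $1 + 1 )); }           # $1 = data offset
array_backup()  { echo $(( $1 + $2 - 1 )); }      # $1 = data offset, $2 = array sectors

# v1.0: data starts at sector 0, superblock near the end (assumed 128 sectors reserved).
echo "v1.0 primary: $(array_primary 0), firmware expects $(firmware_primary)"
echo "v1.0 backup:  $(array_backup 0 $(( DISK_SECTORS - 128 ))), firmware expects $(firmware_backup "$DISK_SECTORS")"

# v1.2: superblock near the start, data shifted by an assumed 262144 sectors.
echo "v1.2 primary: $(array_primary 262144), firmware expects $(firmware_primary)"
```

With v1.0 only the backup header is misplaced; with v1.2 the primary header is already off, whatever the exact data offset is.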

In addition, if you want to boot with UEFI, you need to know that the EFI firmware has no idea that an ESP could be a software RAID member (there is nothing about it in the spec). So it must not be one: the ESP must always be a plain GPT partition.

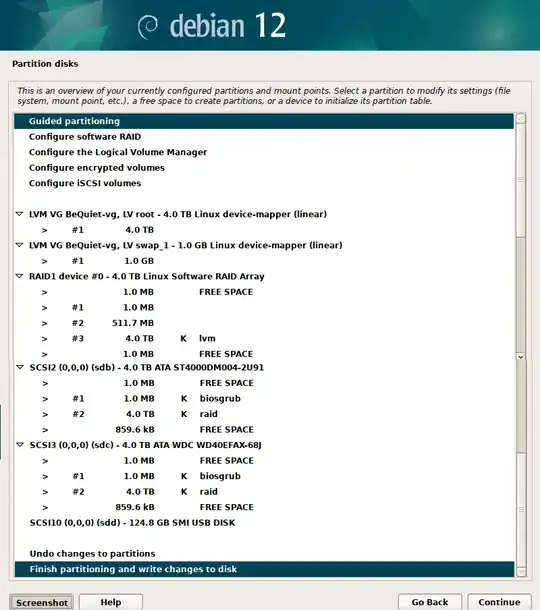

So to resolve this, instead of building a RAID and then partitioning it, you first partition the disks and then assemble some of the partitions into RAIDs. While this is debatable, I suggest the following scheme:

- for an EFI install: an ESP (type 1) of 511 MiB (at the default offset of 1 MiB), then 512 MiB for /boot of type Linux RAID, then the remaining space, also of type Linux RAID (this is type 29 in fdisk, if I am not mistaken).

- for a legacy install: 1 MiB of type 4 (biosgrub), 510 MiB for /boot (RAID), and the rest RAID.
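The two layouts could be expressed with `sgdisk`, roughly as follows. This is a dry-run sketch that only prints the commands. The device names are placeholders, the function names are made up, and it uses `sgdisk` type codes (`ef00` for the ESP, `ef02` for the BIOS boot partition, `fd00` for Linux RAID) rather than the fdisk type numbers above.

```sh
# Dry run: prints sgdisk commands instead of executing them.
# Check the device names, then pipe the output to "sh" as root to apply.

efi_layout() {      # $1 = disk, e.g. /dev/sda (placeholder)
    echo "sgdisk --zap-all $1"                   # wipes existing partition tables
    echo "sgdisk -n 1:1M:+511M -t 1:ef00 $1"     # ESP, 511 MiB at offset 1 MiB
    echo "sgdisk -n 2:0:+512M -t 2:fd00 $1"      # /boot RAID member, 512 MiB
    echo "sgdisk -n 3:0:0 -t 3:fd00 $1"          # remaining space, RAID member
}

legacy_layout() {   # $1 = disk
    echo "sgdisk --zap-all $1"
    echo "sgdisk -n 1:1M:+1M -t 1:ef02 $1"       # BIOS boot partition, 1 MiB
    echo "sgdisk -n 2:0:+510M -t 2:fd00 $1"      # /boot RAID member, 510 MiB
    echo "sgdisk -n 3:0:0 -t 3:fd00 $1"          # remaining space, RAID member
}

# Both disks get the same layout.
for disk in /dev/sda /dev/sdb; do
    efi_layout "$disk"
done
```

In both variants the big RAID partition starts at 512 MiB, so the two schemes differ only in the first two partitions.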

Then you create two RAIDs (one for /boot and one for the rest) and select one of the ESPs to be "the ESP"; you'll enable booting from the second disk after the install. Then you create LVM on the big RAID to hold the filesystems. There you may create a swap volume and a root FS volume; 30 GiB is enough for Debian, and it's easy to enlarge on the fly. Note that all data should go to other, dedicated mounted volumes; it's no good to store application data in the root volume. The rest can be created as needed during the system's lifetime.
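In the installer this is all done in the partitioner, but the equivalent commands look roughly like this. Again a dry-run sketch with assumptions: two disks mirrored as RAID1, partition numbers 2 and 3 as in the scheme above, and a volume group name (`vg0`), volume names and swap size that are purely illustrative.

```sh
# Dry run: prints the commands instead of executing them.
raid_and_lvm() {    # $1, $2 = the two disks (placeholder names)
    echo "mdadm --create /dev/md0 --level=1 --raid-devices=2 ${1}2 ${2}2"   # /boot
    echo "mdadm --create /dev/md1 --level=1 --raid-devices=2 ${1}3 ${2}3"   # the rest
    echo "pvcreate /dev/md1"                # the big RAID becomes an LVM physical volume
    echo "vgcreate vg0 /dev/md1"            # vg0 is an assumed name
    echo "lvcreate -L 30G -n root vg0"      # root FS volume
    echo "lvcreate -L 4G -n swap vg0"       # swap size is an assumption
}

raid_and_lvm /dev/sda /dev/sdb
```

Enlarging the root volume later would then be something like `lvextend --resizefs -L +10G vg0/root`. On NVMe disks the partition names carry a `p` before the number (`/dev/nvme0n1p2`), so the simple suffixing used here would need adjusting.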

Then you install the system as usual. The installer must create FAT32 on the ESP partition; the Debian 11 installer had problems with that, so I had to create it manually. I don't know about Debian 12, since I haven't performed such an install with it, only upgrades. When it comes to bootloader installation, for EFI you just do as the installer suggests, while for legacy you may simply repeat this step and install the bootloader twice, selecting the second disk the second time, so the system will be redundantly bootable right away.
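If the legacy bootloader ends up on only one disk, the second pass can also be done from the running system. A minimal dry-run sketch, assuming BIOS GRUB (`i386-pc` target) and placeholder disk names; the function name is made up:

```sh
# Dry run: prints one grub-install command per disk given as an argument.
legacy_grub() {
    for disk in "$@"; do
        echo "grub-install --target=i386-pc $disk"
    done
}

legacy_grub /dev/sda /dev/sdb
```

Note that the target is the whole disk, not a partition; GRUB places its core image in the 1 MiB biosgrub partition of that disk.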

For EFI, after the first system boot, you need to manually create a FAT32 file system on the second "ESP" partition, mount it somewhere (I use /boot/efi2), and copy everything from /boot/efi, retaining the structure. Then you create a second firmware boot entry using efibootmgr; here are the instructions.
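Put together, the steps for the second ESP might look like the following dry-run sketch. The disk name, the boot entry label and especially the loader path are assumptions: `\EFI\debian\shimx64.efi` is what a typical amd64 Debian install with shim uses, but check the existing entry with `efibootmgr -v` and copy its path. The function name is made up.

```sh
# Dry run: prints the commands instead of executing them.
# printf is used rather than echo so the backslashes in the loader path
# are printed literally in every shell.
clone_esp() {       # $1 = second disk, $2 = ESP partition number
    printf '%s\n' "mkfs.fat -F 32 ${1}${2}"
    printf '%s\n' "mkdir -p /boot/efi2"
    printf '%s\n' "mount ${1}${2} /boot/efi2"
    printf '%s\n' "cp -r /boot/efi/. /boot/efi2/"     # copies the tree, keeping its structure
    printf '%s\n' "efibootmgr --create --disk $1 --part $2 --label 'debian (disk 2)' --loader '\\EFI\\debian\\shimx64.efi'"
}

clone_esp /dev/sdb 1
```

The copy is a one-time snapshot, so it has to be repeated whenever the contents of /boot/efi change, for example after a GRUB or shim upgrade.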