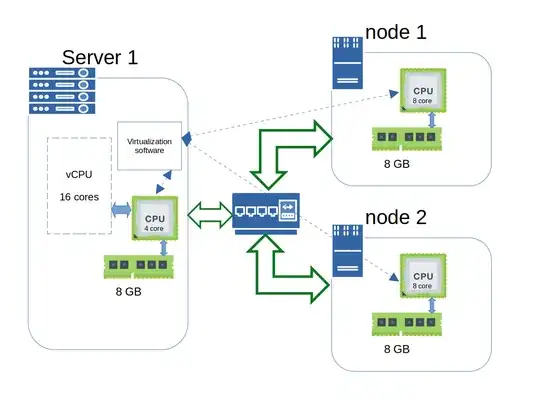

Virtualization experts! I have a question about a possible CPU virtualization concept, illustrated in the diagram above. Does an implementation of this idea already exist, or are there hardware limitations that rule it out? Specifically, has anyone come across CPU virtualization in which software on Server 1 aggregates CPU cores from other nodes (node 1 and node 2), or slave machines, and presents them as a single virtual CPU with a much higher core count? The goal is for programs running on Server 1 to see a larger number of CPU cores (e.g., 16) rather than the 4 or 8 that are physically present.
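
For context, a program usually decides how many threads or processes to start by asking the operating system how many CPUs it may use. The minimal Python sketch below shows that query; the helper name `visible_cpu_count` is hypothetical, and `os.sched_getaffinity` is only available on some platforms (e.g., Linux), so the sketch falls back to `os.cpu_count()`. The idea in the question amounts to making this number report 16 even though some of those cores physically sit on other machines.

```python
import os


def visible_cpu_count() -> int:
    """Return how many CPUs this process may run on, as reported by the OS."""
    # sched_getaffinity is not available on every platform (e.g., it is
    # present on Linux); fall back to the total logical CPU count elsewhere.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    # os.cpu_count() can return None if the count is undetermined.
    return os.cpu_count() or 1


if __name__ == "__main__":
    print(f"This program sees {visible_cpu_count()} CPU(s)")
```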

I'm aware that Big Data frameworks take a similar approach, but they usually require the program to be restructured into map-reduce jobs. My question is whether the virtualization software itself could automatically split a program's executable into chunks, distribute those chunks to the nodes' CPUs (with the data held in memory), and execute them there. Ideally the virtualization layer would handle all of this, so no manual reprogramming would be needed.
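
To make the contrast concrete, here is a minimal sketch of the manual map-reduce decomposition mentioned above, using a word count as a stand-in workload. All function names are hypothetical and chosen for illustration. The point is that the programmer explicitly splits the input, writes an independent map step and a reduce step that merges partial results; in a real cluster each `map_chunk` call would run on a different node, whereas here they simply run one after another in a single process.

```python
from collections import Counter
from functools import reduce


def split_into_chunks(lines, n_chunks):
    """Split the input into roughly equal chunks, one per worker/node."""
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def map_chunk(chunk):
    """Map step: count words in one chunk, independently of all others."""
    counts = Counter()
    for line in chunk:
        counts.update(line.split())
    return counts


def reduce_counts(a, b):
    """Reduce step: merge two partial word counts."""
    return a + b


def word_count(lines, n_chunks=4):
    chunks = split_into_chunks(lines, n_chunks)
    # On a cluster, each map_chunk call would be shipped to a separate node;
    # in this sketch they run sequentially in the same process.
    partials = [map_chunk(c) for c in chunks]
    return reduce(reduce_counts, partials, Counter())


if __name__ == "__main__":
    print(word_count(["a b a", "b c", "a"], n_chunks=2))
```

The decomposition only works because each chunk can be processed without reference to the others; an arbitrary executable gives no such guarantee, which is why frameworks ask the programmer to express the work this way.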

Thanks in advance for your insights. Feel free to comment, and if you know of any other relevant groups or discussion forums, please point me in the right direction.