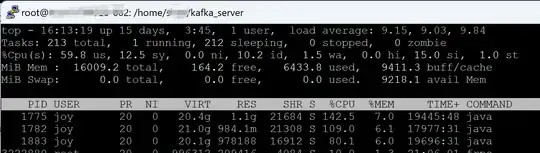

I have a single cloud server with the following spec:

Intel Xeon 4-core 3 GHz CPU, 16 GB memory

I run a Kafka cluster (3 Kafka instances + ZooKeeper) on it via Docker Compose. All images use the `latest` tag and were pulled in early 2024. Here is the configuration of one of the Kafka nodes:

    kafka0:
      #build: .
      image: "confluentinc/cp-kafka:latest"
      ports:
        - "9092:9092"
      environment:
        #DOCKER_API_VERSION: 1.22
        #KAFKA_ADVERTISED_HOST_NAME: kafka0
        #KAFKA_ADVERTISED_PORT: 9092
        KAFKA_LISTENERS: LISTENER_PUBLIC://0.0.0.0:9092,LISTENER_INTERNAL_DOCKER://kafka0:29092
        KAFKA_ADVERTISED_LISTENERS: LISTENER_PUBLIC://aaa.bbb.ccc:9092,LISTENER_INTERNAL_DOCKER://kafka0:29092
        KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_PUBLIC:PLAINTEXT,LISTENER_INTERNAL_DOCKER:PLAINTEXT
        KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_INTERNAL_DOCKER
        KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
        KAFKA_OPTS: -javaagent:/usr/share/jmx_exporter/jmx_prometheus_javaagent-0.19.0.jar=12345:/usr/share/jmx_exporter/kafka-broker.yml
        KAFKA_LOG_MESSAGE_TIMESTAMP_TYPE: LogAppendTime
        #KAFKA_JMX_OPTS: "-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=124.222.206.102 -Dcom.sun.management.jmxremote.rmi.port=12345"
        #JMX_PORT: 12345
        KAFKA_HEAP_OPTS: "-Xmx512M -Xms512M"
        #KAFKA_CREATE_TOPICS: "broker_start_test:1:1"
        KAFKA_LOG_RETENTION_HOURS: 6
      volumes:
        - /var/run/docker.sock:/var/run/docker.sock
        - ./my_extensions:/usr/share/jmx_exporter/
      restart: unless-stopped
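To make the listener setup easier to read, here is a minimal Python sketch that splits the `KAFKA_ADVERTISED_LISTENERS` and `KAFKA_LISTENER_SECURITY_PROTOCOL_MAP` values from the config into one endpoint per listener. The function name `parse_listeners` is hypothetical and only for illustration; it is not part of Kafka or the Docker image.

```python
def parse_listeners(value: str) -> dict[str, tuple[str, int]]:
    """Split 'NAME://host:port,NAME://host:port' into {NAME: (host, port)}."""
    result = {}
    for entry in value.split(","):
        name, address = entry.strip().split("://", 1)
        host, port = address.rsplit(":", 1)
        result[name] = (host, int(port))
    return result


# Values copied from the compose file for kafka0.
advertised = parse_listeners(
    "LISTENER_PUBLIC://aaa.bbb.ccc:9092,LISTENER_INTERNAL_DOCKER://kafka0:29092"
)
security_map = dict(
    pair.split(":", 1)
    for pair in "LISTENER_PUBLIC:PLAINTEXT,LISTENER_INTERNAL_DOCKER:PLAINTEXT".split(",")
)

for name, (host, port) in advertised.items():
    print(f"{name}: {host}:{port} ({security_map[name]})")
```

So external clients reach this broker through `LISTENER_PUBLIC` on port 9092, while the brokers talk to each other through `LISTENER_INTERNAL_DOCKER` on port 29092, both unencrypted.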

Monitoring is set up for the server (jmx_exporter and node-exporter), along with Prometheus and Grafana.

There are now over 1.2K clients connected to the cluster (99% producers, 1% consumers). The application works fine, but the system is under heavy load.

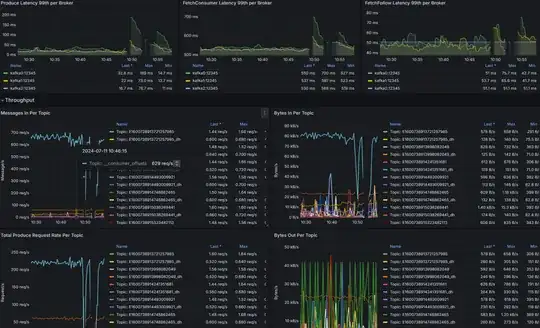

These are the charts I currently have:

- node exporter, for watching the server host
- jmx_exporter, for watching the internals of the Kafka instances
- overall Grafana snapshot

Result of `vmstat 1 5` inside one of the Kafka Docker containers:

    root@ecs-01796520-002:~# docker exec -it 70314ef51864 bash
    [appuser@70314ef51864 ~]$ vmstat 1 5
    procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
     r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
    12  0      0 723444 169068 8917012    0    0    60  1535    5    8 50 22 26  2  1
    15  0      0 722616 169080 8917808    0    0     4  2760 41451 36304 52 21 24  1  1
     8  0      0 721140 169108 8919176    0    0    84  4480 40002 33518 56 20 23  1  0
    12  0      0 719684 169108 8920820    0    0   152     8 40462 35086 56 19 25  0  0
     8  0      0 716452 169140 8921556    0    0     0  4468 40856 35174 55 22 23  1  0
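As a rough way to read these numbers, here is a minimal Python sketch that averages the interval samples and compares the run queue (`r`) to the core count. The 4 cores come from the server spec above. The helper name `summarize` is hypothetical, and comparing runnable tasks per core is only a rule of thumb, not a precise measure of saturation.

```python
# Interval samples copied from the vmstat output; the first row is skipped
# because the first line vmstat prints reports averages since boot.
SAMPLES = """\
15 0 0 722616 169080 8917808 0 0 4 2760 41451 36304 52 21 24 1 1
8 0 0 721140 169108 8919176 0 0 84 4480 40002 33518 56 20 23 1 0
12 0 0 719684 169108 8920820 0 0 152 8 40462 35086 56 19 25 0 0
8 0 0 716452 169140 8921556 0 0 0 4468 40856 35174 55 22 23 1 0
"""
COLUMNS = "r b swpd free buff cache si so bi bo in cs us sy id wa st".split()
CORES = 4  # from the server spec


def summarize(text: str, cores: int) -> dict[str, float]:
    """Average the vmstat rows and derive a few load indicators."""
    rows = [
        dict(zip(COLUMNS, map(int, line.split())))
        for line in text.splitlines()
        if line.strip()
    ]

    def mean(key: str) -> float:
        return sum(row[key] for row in rows) / len(rows)

    return {
        "runnable_per_core": mean("r") / cores,
        "cpu_busy_pct": mean("us") + mean("sy"),
        "context_switches_per_sec": mean("cs"),
    }


summary = summarize(SAMPLES, CORES)
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

For these samples the run queue averages well above the number of cores and user plus system CPU time sits at roughly three quarters of capacity, which matches the load shown in the charts.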

Questions:

- Why does the cluster show such a high load with such a small number of clients and so little data?

- How can I scale it to support more clients, e.g. 8K?