I am running a media API on Cloud Run, and while it runs fine, its cost efficiency is disastrous because the CPU of each instance is heavily underutilized.

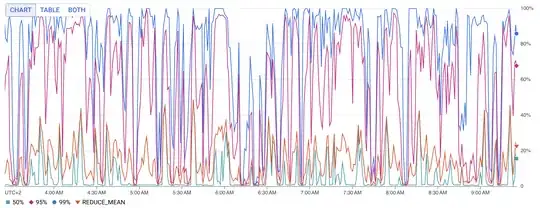

Here's what my CPU utilization chart looks like:

As you can see, while there are some spikes where the CPU hits 100%, most of the time (median and mean) the CPU sits below 20%.

My API consists of CPU-heavy operations (ffmpeg transcoding) that take all the CPU resources available, and low-CPU async operations (downloading and uploading videos).

This would explain the graph above: most of the time, the instance is waiting on async tasks with low CPU usage, and it only occasionally spikes to near 100% while processing a video.
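To make the I/O vs. CPU split concrete, here is a minimal asyncio sketch, assuming a Python async server (the question does not say which stack is used). `download`, `upload`, `TranscodeGate` and `handle_request` are hypothetical names; the I/O steps are simulated with `asyncio.sleep`, and a small Python subprocess stands in for ffmpeg so the sketch runs anywhere. The optional semaphore is one way to stop several concurrent requests from starting more transcodes at once than the instance can handle.

```python
import asyncio
import sys
from typing import List, Tuple


async def download(video_id: str) -> str:
    # Hypothetical stand-in: the real service would await an HTTP/storage
    # download here, which is mostly network wait and very little CPU.
    await asyncio.sleep(0.05)
    return f"/tmp/{video_id}.in"


async def upload(path: str) -> None:
    # Hypothetical stand-in for the async upload of the transcoded file.
    await asyncio.sleep(0.05)


class TranscodeGate:
    """Limits how many CPU-heavy subprocesses run at once on one instance."""

    def __init__(self, max_cpu_jobs: int) -> None:
        self._sem = asyncio.Semaphore(max_cpu_jobs)
        self.running = 0
        self.peak = 0  # highest number of simultaneous transcodes observed

    async def run(self, cmd: List[str]) -> int:
        async with self._sem:
            self.running += 1
            self.peak = max(self.peak, self.running)
            try:
                # The child process does the CPU work; awaiting it does not block
                # the event loop, so other requests keep downloading/uploading.
                proc = await asyncio.create_subprocess_exec(*cmd)
                return await proc.wait()
            finally:
                self.running -= 1


async def handle_request(video_id: str, gate: TranscodeGate, cmd: List[str]) -> int:
    src = await download(video_id)
    # In the real service cmd would be built from src (an ffmpeg invocation).
    exit_code = await gate.run(cmd)
    await upload(src + ".out")
    return exit_code


async def main(max_cpu_jobs: int = 1) -> Tuple[List[int], int]:
    gate = TranscodeGate(max_cpu_jobs)
    # Stand-in CPU job so the sketch runs without ffmpeg installed.
    cpu_job = [sys.executable, "-c", "sum(range(2_000_000))"]
    results = await asyncio.gather(
        *(handle_request(f"video{i}", gate, cpu_job) for i in range(3))
    )
    return list(results), gate.peak


if __name__ == "__main__":
    codes, peak = asyncio.run(main())
    print("exit codes:", codes, "peak concurrent transcodes:", peak)
```

With `max_cpu_jobs` set to roughly the instance's vCPU count (an assumption, not something stated in the question), downloads and uploads for many requests can overlap while transcodes queue instead of oversubscribing the CPU.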

The solution would be to increase the concurrency of each instance. That way, while one request is waiting on I/O, another can be transcoding, and vice versa, so the CPU is better utilized on average. This would also increase response latency, but that is completely fine for my use case.
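The reasoning above can be put into rough numbers with an idealized model (an assumption, not a measurement): each request spends `t_cpu` seconds saturating the CPU and `t_io` seconds waiting on I/O, and concurrent requests interleave perfectly with no contention. The function name and the example timings are hypothetical.

```python
def expected_cpu_utilization(t_cpu: float, t_io: float, concurrency: int) -> float:
    """Idealized average CPU utilization of one instance.

    Assumes each request spends t_cpu seconds at full CPU and t_io seconds
    waiting on network I/O, and that concurrent requests interleave perfectly.
    """
    if t_cpu <= 0 or t_io < 0 or concurrency < 1:
        raise ValueError("need t_cpu > 0, t_io >= 0, concurrency >= 1")
    single = t_cpu / (t_cpu + t_io)  # busy fraction with one request in flight
    return min(1.0, concurrency * single)


# Hypothetical timings: 2 s of transcoding, 8 s of downloading/uploading.
for c in (1, 2, 5, 8):
    print(c, expected_cpu_utilization(t_cpu=2.0, t_io=8.0, concurrency=c))
```

Under this model, utilization grows linearly with concurrency until `concurrency * t_cpu / (t_cpu + t_io)` reaches 1; past that point extra requests only wait for CPU, which is where the added latency comes from.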

Except that when I try to increase the concurrency, nothing changes: Cloud Run still seems to spin up new instances to handle new requests. My theory is that, because of those CPU usage spikes, Cloud Run considers those instances already at capacity and starts a new instance instead of routing the request to an existing one.
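The theory above can be written as a toy routing rule, purely to make the hypothesis concrete. This is NOT Cloud Run's actual scheduling algorithm; `Instance`, `route` and the `cpu_ceiling` threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instance:
    in_flight: int  # requests currently being served by this instance
    cpu: float      # current CPU utilization, from 0.0 to 1.0


def route(instances: List[Instance], max_concurrency: int, cpu_ceiling: float) -> Optional[int]:
    """Return the index of an instance to reuse, or None to start a new one.

    Hypothetical model of the theory, not Cloud Run's real logic: an instance
    is reused only if it has a free concurrency slot AND its CPU is below
    cpu_ceiling.
    """
    for i, inst in enumerate(instances):
        if inst.in_flight < max_concurrency and inst.cpu < cpu_ceiling:
            return i
    return None


# One instance with 3 free slots out of 4, but currently mid-transcode.
print(route([Instance(in_flight=1, cpu=0.95)], max_concurrency=4, cpu_ceiling=0.8))  # None
# The same instance while it is only waiting on a download/upload.
print(route([Instance(in_flight=1, cpu=0.10)], max_concurrency=4, cpu_ceiling=0.8))  # 0
```

If routing worked like this, raising the concurrency setting alone would not reduce the instance count whenever new requests arrive during a transcode spike.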

This seems like a fairly common use case, yet I haven't found any resources on how to optimize this kind of workload.

Any ideas on what I could try?

The tradeoffs I am willing to make:

- latency

- stability (if an instance crashes once in a while, I can just retry)