Since this question is not about "code not working", I'm asking my first question here instead of StackOverflow. Inform me if any required information is missing from the question.

Setup:

I have two dictionaries of type Dictionary<int, int> where the keys are a range from 1 to n and the values are the frequencies of those numbers. For example:

var frequenciesA = new Dictionary<int, int>

{

{ 1, 3 },

{ 2, 5 },

{ 3, 4 }

};

var frequenciesB = new Dictionary<int, int>

{

{ 1, 1 },

{ 2, 3 },

{ 3, 4 }

};

Input:

Now I will get a list of integers as an input, in the range of 1 to n, like this:

var numbers = new List<int> { 1, 2, 3, 3, 2, 1, 1, 2, 3, 1, 2, 2 };

I also create a frequency-dictionary from this list with following code:

var frequenciesFromInput = Enumerable.Range(1, 3)

.ToDictionary(x => x,

x => numbers.Count(y => y == x));

This would result in following key-value pairs:

K V

----

1 4

2 5

3 3

Problem:

Suppose I needed to determine to which from the other dictionaries (A or B) the frequencies equals, that'd be easy: take the values of the dictionaries as a list and use the Enumerable.SequenceEqual<T> method.

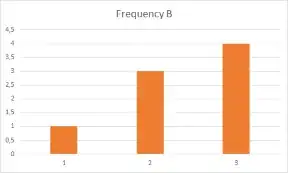

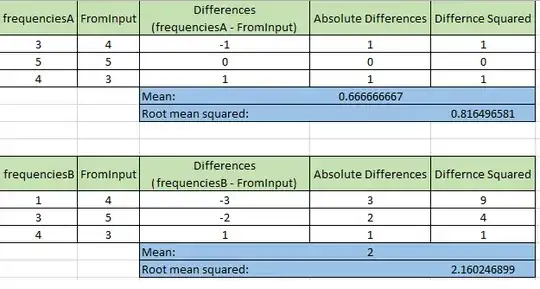

But in my case I need to determine which dictionary (A or B) matches closest to the frequencies of the input dictionary. Visualizing this makes it easier to understand. Here are the charts for the constant frequencies of dictionary A and B:

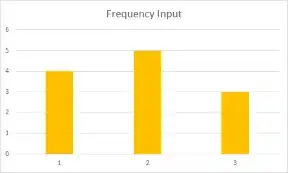

And here's the chart of the input dictionary:

As you can see the frequencies of A match closer than to those of B.

Question:

How would I start creating a method/algorithm to determine which dictionary (A or B) is closer to that of the input dictionary. I'm not asking for a full implementation, just a little push as for now I have no idea where and how to start on this.

Only thing I could think of was some variation on the Knapsack problem, but I'm not sure I'm on the right path there.