Mergesort is a divide and conquer algorithm and is O(log n) because the input is repeatedly halved. But shouldn't it be O(n) because even though the input is halved each loop, each input item needs to be iterated on to do the swapping in each halved array? This is essentially asymptotically O(n) in my mind. If possible please provide examples and explain how to count the operations correctly! I haven't coded anything up yet but I've been looking at algorithms online. I've also attached a gif of what wikipedia is using to visually show how mergesort works.

5 Answers

It's O(n * log(n)), not O(log(n)). As you've accurately surmised, the entire input must be iterated through, and this must occur O(log(n)) times (the input can only be halved O(log(n)) times). n items iterated log(n) times gives O(n log(n)).

It's been proven that no comparison sort can operate faster than this. Only sorts that rely on a special property of the input such as radix sort can beat this complexity. The constant factors of mergesort are typically not that great though so algorithms with worse complexity can often take less time.

- 36,914

The complexity of merge sort is O(nlog(n)) and NOT O(log(n)).

Merge sort is a divide and conquer algorithm. Think of it in terms of 3 steps:

- The divide step computes the midpoint of each of the sub-arrays. Each of this step just takes O(1) time.

- The conquer step recursively sorts two subarrays of n/2 (for even n) elements each.

- The merge step merges n elements which takes O(n) time.

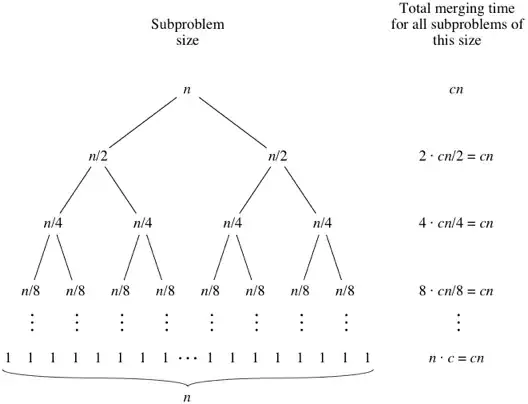

Now, for steps 1 and 3 i.e. between O(1) and O(n), O(n) is higher. Let's consider steps 1 and 3 take O(n) time in total. Say it is cn for some constant c.

How many times are we subdividing the original array?

We subdivide the input until each sub-array has one item so there are exactly log(n) "subdivision stages". We undo each subdivision stage with a "merge stage".

For example, if n = 8, there is a total of 3 merge stages: one where each pair of n/8 sub-arrays are merged to form a single n/4 sub-array, one where pairs of n/4s are merged to form n/2s, and one where the pair of n/2 are merged to form n.

What is the time cost for merging all pairs at each merge stage?

For this, look at the tree below - for each level from top to bottom:

- Level 2 calls merge method on 2 sub-arrays of length n/2 each. The complexity here is 2 * (cn/2) = cn.

- Level 3 calls merge method on 4 sub-arrays of length n/4 each. The complexity here is 4 * (cn/4) = cn.

- and so on ...

At each merge stage, the total cost for merging all pairs is O(cn). Since there are log(n) merge stages, the total complexity is: (cost per stage)*(number of stages) = (cn)*(log(n)) or O(nlog(n)).

Image credits: Khan Academy

- 163

- 801

Merge Sort is a recursive algorithm and time complexity can be expressed as following recurrence relation.

T(n) = 2T(n/2) + ɵ(n)

The above recurrence can be solved either using Recurrence Tree method or Master method. It falls in case II of Master Method and solution of the recurrence is ɵ(n log n).

Time complexity of Merge Sort is ɵ(nLogn) in all 3 cases (worst, average and best) as merge sort always divides the array in two halves and take linear time to merge two halves.

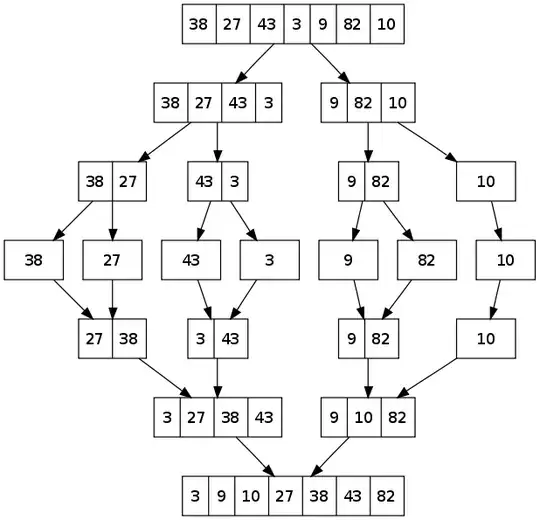

It divides input array in two halves, calls itself for the two halves and then merges the two sorted halves. The merg() function is used for merging two halves. The merge(arr, l, m, r) is key process that assumes that arr[l..m] and arr[m+1..r] are sorted and merges the two sorted sub-arrays into one. See following C implementation for details.

MergeSort(arr[], l, r)

If r > l

1. Find the middle point to divide the array into two halves:

middle m = (l+r)/2

2. Call mergeSort for first half:

Call mergeSort(arr, l, m)

3. Call mergeSort for second half:

Call mergeSort(arr, m+1, r)

4. Merge the two halves sorted in step 2 and 3:

Call merge(arr, l, m, r)

If we take a closer look at the diagram, we can see that the array is recursively divided in two halves till the size becomes 1. Once the size becomes 1, the merge processes comes into action and starts merging arrays back till the complete array is merged.

- 109

Comparison based sort algorithms have a lower bound (n*log(n)), which means it's not possible to have a comparison based sorting algorithm with O(log(n)) time complexity.

By the way, merge sort is O(n*log(n)).

Think it this way.

[ a1,a2, a3,a4, a5,a6, a7,a8 .... an-3,an-2, an-1, an ]

\ / \ / \ / \ / \ / \ /

a1' a3' a5' a7' an-3' an-1'

\ / \ / \ /

a1'' a5'' an-3''

\ / /

a1''' /

\

a1''''

This looks a reversed binary tree.

Let the input size be n.

Each a_n represents a list of elements. First line's a_n have only one element.

At each level, the sum of the merge cost on average is n(there exists corner cases which the cost is lower[1]). And the tree's height is log_2(n).

So, the time complexity of merge sort is O(n*log_2(n)).

[1] if sorting on a list that is already sorted, which is called the best case. the cost reduced to

n/2 + n/4 + n/8 + .... + 1 = 2^log_2(n) -1 ~ O(n). (assume the lengthnis power of two)

- 11

Sorting is a NP-Complete problem in computer science (Non Polynomial problem). This means that, unless mathematically proven, you can't go below O(n log n) when sorting an list of elements.

Check this article in Wikipedia (https://en.wikipedia.org/wiki/P_versus_NP_problem)

Basically so far nobody managed to prove that (P == NP) and if you do, you first become millionaire, second you start world war III due to the fact that you will be able to break all the pub/private key security mechanisms used everywhere nowadays :)

- 95