Summary: Finding and exploiting the instruction-level parallelism (ILP) in a single-threaded program is done purely in hardware, by the CPU core it's running on, and only over a window of a couple hundred instructions, not by large-scale reordering.

Single-threaded programs get no benefit from multi-core CPUs, except that other things can run on the other cores instead of taking time away from the single-threaded task.

> the OS organizes the instructions of all threads in such a way that they are not waiting on each other.

The OS does NOT look inside the instruction streams of threads. It only schedules threads to cores.

Actually, each core runs the OS's scheduler function when it needs to figure out what to do next. Scheduling is a distributed algorithm. To better understand multi-core machines, think of each core as running the kernel separately. Just like a multi-threaded program, the kernel is written so that its code on one core can safely interact with its code on other cores to update shared data structures (like the list of threads that are ready to run).

Anyway, the OS is involved in helping multi-threaded processes exploit thread-level parallelism, which must be explicitly exposed by manually writing a multi-threaded program (or by an auto-parallelizing compiler, or with OpenMP or something similar).
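As a minimal sketch of what "explicitly exposing" thread-level parallelism can look like, here is an array sum annotated with an OpenMP pragma. The function name `parallel_sum` is hypothetical, chosen only for illustration. When built with OpenMP enabled (e.g. `-fopenmp` with GCC or Clang), the runtime creates threads and the OS schedules them onto cores; without that flag the pragma is ignored and the same code runs on a single thread.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical example function: sum an array of ints.
   The pragma asks an OpenMP-enabled compiler to split the loop
   iterations across threads and combine the per-thread partial sums.
   Compiled without OpenMP support, this is an ordinary serial loop. */
long parallel_sum(const int *data, size_t n)
{
    assert(data != NULL || n == 0);

    long sum = 0;
    long i;
    #pragma omp parallel for reduction(+:sum)
    for (i = 0; i < (long)n; i++) {
        sum += data[i];
    }
    return sum;
}
```

Either way, the parallelism comes from the programmer (or compiler) creating the threads. The OS only decides which core each thread runs on.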

> Then the front-end of the CPU further organizes those instructions by distributing one thread to each core, and distributes independent instructions from each thread among any open cycles.

A CPU core is only running one stream of instructions, if it isn't halted (asleep until the next interrupt, e.g. timer interrupt). Often that's a thread, but it could also be a kernel interrupt handler, or miscellaneous kernel code if the kernel decided to do something other than just return to the previous thread after handling an interrupt or system call.

With HyperThreading or other SMT designs, a physical CPU core acts like multiple "logical" cores. The only difference from an OS perspective between a quad-core-with-hyperthreading (4c8t) CPU and a plain 8-core machine (8c8t) is that an HT-aware OS will try to schedule threads to separate physical cores so they don't compete with each other. An OS that didn't know about hyperthreading would just see 8 cores (unless you disable HT in the BIOS, then it would only detect 4).

The term "front-end" refers to the part of a CPU core that fetches machine code, decodes the instructions, and issues them into the out-of-order part of the core. Each core has its own front-end, and it's part of the core as a whole. Instructions it fetches are what the CPU is currently running.

Inside the out-of-order part of the core, instructions (or uops) are dispatched to execution ports when their input operands are ready and there's a free execution port. This doesn't have to happen in program order, so this is how an OOO CPU can exploit the instruction-level parallelism within a single thread.

If you replace "core" with "execution unit" in your idea, you're close to correct. Yes, the CPU does distribute independent instructions/uops to execution units in parallel. (But there's a terminology mix-up, since you said "front-end" when really it's the CPU's instruction-scheduler aka Reservation Station that picks instructions ready to execute).

Out-of-order execution can only find ILP on a very local level, only up to a couple hundred instructions, not between two independent loops (unless they're short).

For example, the asm equivalent of this

    int i=0,j=0;
    do {
        i++;
        j++;
    } while(42);

will run about as fast as the same loop only incrementing one counter on Intel Haswell. i++ only depends on the previous value of i, while j++ only depends on the previous value of j, so the two dependency chains can run in parallel without breaking the illusion of everything being executed in program order.

On x86, the loop would look something like this:

    top_of_loop:
        inc eax
        inc edx
        jmp top_of_loop

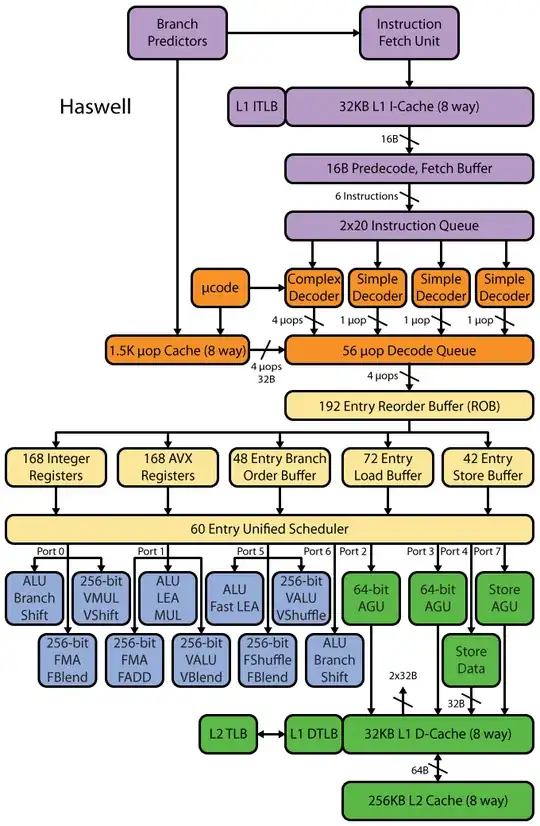

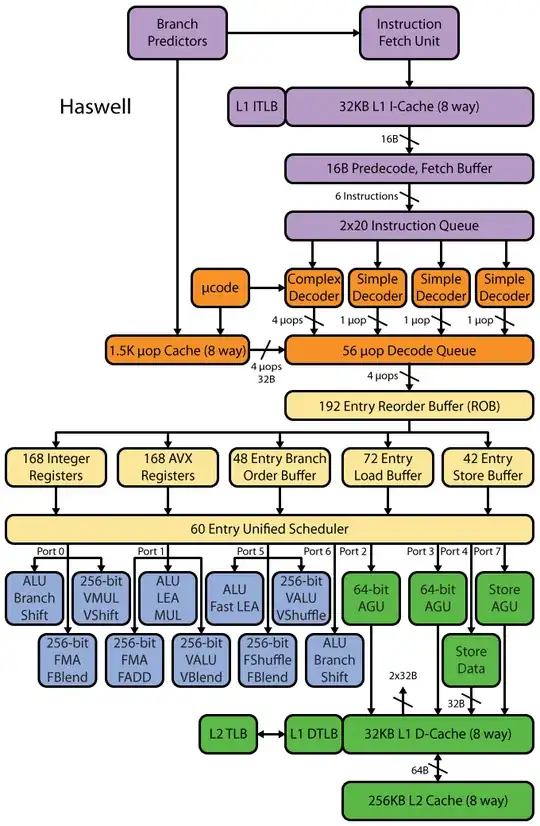

Haswell has 4 integer execution ports, and all of them have adder units, so it can sustain a throughput of up to 4 inc instructions per clock if they're all independent. (With latency=1, you only need 4 registers to max out the throughput by keeping 4 inc instructions in flight. Contrast this with vector-FP MUL or FMA: with latency=5 and a reciprocal throughput of 0.5 cycles (2 per clock), you need 5 × 2 = 10 vector accumulators to keep 10 FMAs in flight and max out the throughput. And each vector can be 256 bits, holding 8 single-precision floats.)
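The "multiple accumulators" idea can be sketched in plain C. This is an illustration only: the function names are hypothetical, it uses scalar `float` adds rather than vector FMAs, and the choice of four accumulators is arbitrary rather than tuned for any particular CPU. The point is just that the second version gives the out-of-order core several independent dependency chains to overlap, where the first version has one.

```c
#include <assert.h>
#include <stddef.h>

/* One accumulator: every add needs the result of the previous add,
   so the whole loop is a single serial dependency chain. */
float sum_one_chain(const float *x, size_t n)
{
    assert(x != NULL || n == 0);

    float acc = 0.0f;
    for (size_t i = 0; i < n; i++)
        acc += x[i];
    return acc;
}

/* Four accumulators: four independent dependency chains, so adds from
   different chains can be in flight at the same time. */
float sum_four_chains(const float *x, size_t n)
{
    assert(x != NULL || n == 0);

    float a0 = 0.0f, a1 = 0.0f, a2 = 0.0f, a3 = 0.0f;
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        a0 += x[i];
        a1 += x[i + 1];
        a2 += x[i + 2];
        a3 += x[i + 3];
    }
    for (; i < n; i++)      /* leftover elements when n is not a multiple of 4 */
        a0 += x[i];
    return (a0 + a1) + (a2 + a3);
}
```

Because floating-point addition isn't associative, the two functions can round differently on general inputs. That is also why compilers normally won't make this transformation for you unless you allow it with something like `-ffast-math`.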

The taken-branch is also a bottleneck: a loop always takes at least one whole clock per iteration, because taken-branch throughput is limited to 1 per clock. I could put one more instruction inside the loop without reducing performance, unless it also reads/writes eax or edx in which case it would lengthen that dependency chain. Putting 2 more instructions in the loop (or one complex multi-uop instruction) would create a bottleneck on the front-end, since it can only issue 4 uops per clock into the out-of-order core. (See this SO Q&A for some details on what happens for loops that aren't a multiple of 4 uops: the loop-buffer and uop cache make things interesting.)

In more complex cases, finding the parallelism requires looking at a larger window of instructions. (e.g. maybe there's a sequence of 10 instructions that all depend on each other, then some independent ones).

The Re-Order Buffer (ROB) capacity is one of the factors that limits the out-of-order window size. On Intel Haswell, it's 192 uops. (And you can even measure it experimentally, along with register-renaming capacity (register-file size).) Low-power CPU cores, like many ARM designs, have much smaller ROB sizes, if they do out-of-order execution at all.
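To make the window limit concrete, here is a hedged sketch with two independent serial dependency chains. All names and the constants in `step` are hypothetical, picked only for illustration. Written as two long loops back to back, the second chain's instructions are far outside the out-of-order window while the first loop runs, so the hardware can't overlap them. Interleaving the chains by hand in one loop puts both within a few instructions of each other, where the core can run them in parallel. Whether this is actually faster depends on the compiler and CPU, so treat it as something to measure, not a guarantee.

```c
#include <assert.h>
#include <stdint.h>

/* One step of a serial dependency chain: each result feeds the next step.
   The constants are arbitrary illustrative values. */
static uint32_t step(uint32_t v)
{
    return v * 1664525u + 1013904223u;
}

/* Two long independent loops, one after the other. For large iters the
   second loop is too far away in the instruction stream to overlap
   with the first. */
uint32_t chains_back_to_back(uint32_t a, uint32_t b, unsigned long iters)
{
    for (unsigned long i = 0; i < iters; i++)
        a = step(a);
    for (unsigned long i = 0; i < iters; i++)
        b = step(b);
    return a ^ b;
}

/* Same work, interleaved by hand: both chains sit next to each other,
   well inside the out-of-order window. */
uint32_t chains_interleaved(uint32_t a, uint32_t b, unsigned long iters)
{
    for (unsigned long i = 0; i < iters; i++) {
        a = step(a);
        b = step(b);
    }
    return a ^ b;
}

/* Sanity check: both orderings compute the same value. */
void check_equivalence(void)
{
    assert(chains_back_to_back(1u, 2u, 1000UL) ==
           chains_interleaved(1u, 2u, 1000UL));
}
```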

Also note that CPUs need to be pipelined, as well as out-of-order. So a core has to fetch and decode instructions well ahead of the ones being executed, preferably with enough throughput to refill buffers after missing any fetch cycles. Branches are tricky, because the core doesn't even know where to fetch from until it knows which way a branch went. This is why branch prediction is so important. (And why modern CPUs use speculative execution: they guess which way a branch will go and start fetching/decoding/executing that instruction stream. When a misprediction is detected, they roll back to the last known-good state and execute from there.)

If you want to read more about CPU internals, there are some links in the Stack Overflow x86 tag wiki, including to Agner Fog's microarch guide, and to David Kanter's detailed writeups with diagrams of Intel and AMD CPUs. From his Intel Haswell microarchitecture writeup, this is the final diagram of the whole pipeline of a Haswell core (not the whole chip).

This is a block diagram of a single CPU core. A quad-core CPU has 4 of these on a chip, each with their own L1/L2 caches (sharing an L3 cache, memory controllers, and PCIe connections to the system devices).

I know this is overwhelmingly complicated. Kanter's article also shows parts of this to talk about the frontend separately from the execution units or the caches, for example.