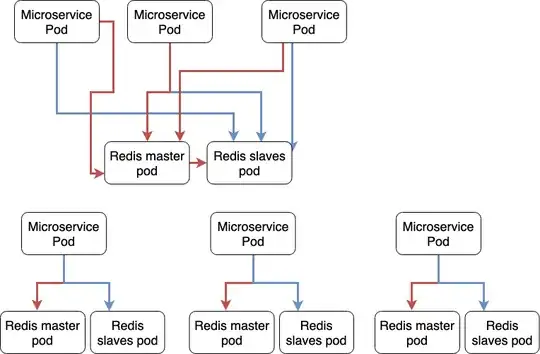

If we consider the cache to be orthogonal to the architecture (and it's), the first pic is ok.

For the same reason that we don't deploy one security service, one API gateway, one message broker or one service locator per POD we don't have to deploy one Cache (in replica-set) per POD.1,2

Be aware of premature optimizations

Caches are meant to solve specific performance issues. Mainly those derived from costly IPCs or heavy calculations. Without evidence of any of these issues, deploying caches (in replica-set) per POD for the sake of the "MS' God", is premature optimization (among other things).

The Cloud can kill you.

If our goal is to deploy the architecture in the cloud, the smart move would be to start small. Be conservative. Scaling up|out as the needs come because the contrary is oversizing the architecture. Oversized architectures are potentially dangerous in the cloud because they can, literally, kill the project devouring the ROI in no time. 3

Size the solution according to the problem

Perform load tests first, get metrics and shreds of evidence of performance issues, find out whether caches are the solution to these issues. Then, size the solution according to the problem. If and only if services are proven to need a dedicated cache, deploy them. By the time you do it, you do it uppon objective metrics and keeping the bills under control.

I was told once

If the solution is more complex or more expensive than the problem it

solves, then it's not a profitable solution. It's not even a solution!

Emphasis mine

Keep complexity at bay

MS architectures are complex per se, they don't need us adding more complexity for the sake of dogmas or beliefs we barely understand. Keep the overall complexity of the system as lower as possible but not lower. In other words, keep it simple but not at the cost of defeating the purpose of the architecture (total freedom of deployment and SDLC).

1: I'm assuming that every POD is a replica of the same Service, not different services.

2: MS architectures are not about many but small systems, it's about a single system composed by business "capabilities", working all together for a greater good.

3: Cloud is anything but cheap. Particularly, when it comes to buy RAM