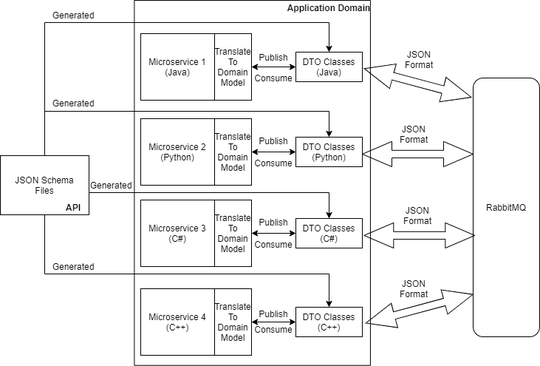

Currently, our architecture uses an "API-first" approach to building our product. The product is split across multiple teams, each handling different microservices.

The image above summarizes our approach. A set of JSON Schema files is shared between all the microservices. These files are the contracts between the services, and DTO classes are auto-generated from them for every microservice. RabbitMQ is the message broker for all the microservices, and messages are published and consumed using these DTO classes. When publishing, the DTOs are serialized to JSON and sent through RabbitMQ. When receiving, the JSON is deserialized back into DTOs.
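
A minimal sketch of the publish/consume round trip described above, assuming Python dataclasses as the DTOs. `PersonDTO` and its fields are hypothetical stand-ins for a class generated from one of the shared schema files. The actual RabbitMQ client call is left out; only the JSON bytes that would travel through the broker are shown.

```python
import json
from dataclasses import asdict, dataclass


# Hypothetical DTO, standing in for a class auto-generated from a shared
# JSON Schema file. Field names are illustrative only.
@dataclass
class PersonDTO:
    first_name: str
    last_name: str
    age: int


def serialize(dto: PersonDTO) -> bytes:
    """Publisher side: turn the DTO into the JSON bytes sent over the broker."""
    return json.dumps(asdict(dto)).encode("utf-8")


def deserialize(body: bytes) -> PersonDTO:
    """Consumer side: turn the received JSON bytes back into a DTO."""
    return PersonDTO(**json.loads(body.decode("utf-8")))


if __name__ == "__main__":
    outgoing = PersonDTO(first_name="Ada", last_name="Lovelace", age=36)
    body = serialize(outgoing)    # would be handed to the broker client's publish call
    incoming = deserialize(body)  # would run inside the consumer callback
    print(incoming == outgoing)
```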

Since the underlying domain model does not map directly to the JSON Schema, there is a translation layer that extracts the relevant properties from the DTO classes and populates the domain model used by the business logic.
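
The translation layer can be pictured as one mapping function per message type. In this hypothetical sketch, `PersonDTO` mirrors the wire contract, while `Person` and `Address` are an invented domain model whose shape differs from it:

```python
from dataclasses import dataclass


# Hypothetical DTO generated from the shared JSON Schema (the wire contract).
@dataclass
class PersonDTO:
    first_name: str
    last_name: str
    age: int
    street: str
    city: str


# Hypothetical domain model; its structure does not match the contract.
@dataclass
class Address:
    street: str
    city: str


@dataclass
class Person:
    full_name: str
    age: int
    address: Address


def to_domain(dto: PersonDTO) -> Person:
    """Translation layer: pick the relevant DTO properties and build the domain model."""
    return Person(
        full_name=f"{dto.first_name} {dto.last_name}",
        age=dto.age,
        address=Address(street=dto.street, city=dto.city),
    )
```

Any contract change that touches a mapped field (say, renaming `street`) forces a matching edit to `to_domain` in every consuming service.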

The problem we currently face is that whenever a JSON Schema file changes, every service that uses it, along with that service's translation layer, has to be adapted to accommodate the change. In addition, the translation layer grows with every change to the JSON Schema.

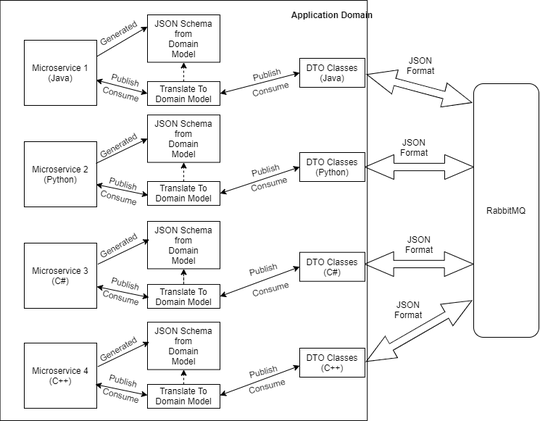

One possible solution is to create the JSON Schema files directly from the domain model. This does not remove the need for a translation layer, but it would keep that layer small enough to maintain. The new architecture is shown in the second image above.
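
Generating the schema from the domain model could look roughly like the following. The sketch assumes domain classes are Python dataclasses whose fields are either primitives or nested dataclasses. `schema_for` is a hypothetical helper, not an existing library function, and it emits only the `type`, `properties` and `required` keywords.

```python
import json
from dataclasses import dataclass, fields, is_dataclass
from typing import get_type_hints

# Minimal mapping from Python types to JSON Schema types (assumption: only
# these primitives and nested dataclasses are supported in this sketch).
_PRIMITIVES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def schema_for(cls: type) -> dict:
    """Build a JSON Schema object definition from a dataclass."""
    hints = get_type_hints(cls)
    properties = {}
    for f in fields(cls):
        field_type = hints[f.name]
        if is_dataclass(field_type):
            # Nested domain objects become nested object schemas.
            properties[f.name] = schema_for(field_type)
        else:
            properties[f.name] = {"type": _PRIMITIVES[field_type]}
    return {
        "type": "object",
        "properties": properties,
        "required": [f.name for f in fields(cls)],
    }


# Hypothetical domain model used as the source of the schema.
@dataclass
class Address:
    street: str
    city: str


@dataclass
class Person:
    full_name: str
    age: int
    address: Address


if __name__ == "__main__":
    print(json.dumps(schema_for(Person), indent=2))
```

A real generator would also need to handle lists, optional fields and enums; this one raises `KeyError` for any unsupported field type.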

The main problem with this is that it is no longer an "API-first" approach. Also, two microservices may have domain classes with the same name but different meanings, e.g. Person(age, height, address) in one service and Person(strengths, weaknesses, insecurities) in another. In this case, no translation between them is possible.

One option is to somehow share the JSON Schema files across all the microservices using a shared configuration service (like Netflix Archaius).
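
A rough sketch of the consuming side of such a setup, assuming each service fetches schemas from a central store by name and version and caches them locally. The `fetch` callable is a placeholder for whatever client the shared configuration service provides (no Archaius API is used or implied), the version key is an assumption, and `missing_required` only checks `required` properties rather than performing full JSON Schema validation.

```python
import json
from typing import Callable, Dict, List, Tuple

# Placeholder type: given (schema name, version), return the schema's JSON text.
# In practice this would wrap the shared configuration service's client.
Fetcher = Callable[[str, int], str]


class SchemaCache:
    """Per-service cache of shared JSON Schemas, keyed by (name, version)."""

    def __init__(self, fetch: Fetcher) -> None:
        self._fetch = fetch
        self._cache: Dict[Tuple[str, int], dict] = {}

    def get(self, name: str, version: int) -> dict:
        key = (name, version)
        if key not in self._cache:
            # Only the first lookup for a given key hits the central store.
            self._cache[key] = json.loads(self._fetch(name, version))
        return self._cache[key]


def missing_required(schema: dict, message: dict) -> List[str]:
    """Return the schema's required properties that are absent from the message."""
    return [name for name in schema.get("required", []) if name not in message]


if __name__ == "__main__":
    # An in-memory dict stands in for the central schema store.
    store = {("person", 2): '{"type": "object", "required": ["full_name", "age"]}'}
    cache = SchemaCache(lambda name, version: store[(name, version)])
    schema = cache.get("person", 2)
    print(missing_required(schema, {"full_name": "Ada"}))  # ['age']
```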

My questions are:

- Is such an architecture feasible?

- If yes, how can I share the JSON files (probably using a shared configuration)?

- If no, what alternative approach should I use?