I have a grid-based puzzle game that is based on states. I need an algorithm that can find a solution for each game level. A game level starts in a specific state and ends in a unique, well-known state, so I need to find a path from the start state to the end state.

Each object in the game grid can be moved in 4 directions and only stops when it collides with another object. Each move produces a unique, well-defined state. The game level is solved when all objects are positioned in their pre-specified locations, which is the puzzle part of the game. The game rules are extremely simple, but finding the solution for a level seems very hard.
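To make the movement rule concrete, here is a minimal sketch of a single move. It is not the game's actual code: the state layout (a dict of ball positions plus a set of wall cells), the `slide` function, and the `DIRECTIONS` table are all hypothetical names chosen for illustration, and it assumes the level is fully enclosed by walls.

```python
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]  # (row, col)

# Hypothetical direction table; the names are illustrative only.
DIRECTIONS: Dict[str, Cell] = {
    "up": (-1, 0),
    "down": (1, 0),
    "left": (0, -1),
    "right": (0, 1),
}


def slide(balls: Dict[str, Cell], walls: Set[Cell], ball: str, direction: str) -> Dict[str, Cell]:
    """Return a new state in which `ball` has slid until it hits a wall or another ball.

    Assumes the level is fully enclosed by walls, so the loop always terminates.
    """
    d_row, d_col = DIRECTIONS[direction]
    row, col = balls[ball]
    # Every wall and every other ball blocks the moving ball.
    occupied = walls | {pos for name, pos in balls.items() if name != ball}
    while (row + d_row, col + d_col) not in occupied:
        row, col = row + d_row, col + d_col
    new_state = dict(balls)  # copy, so the parent state stays untouched
    new_state[ball] = (row, col)
    return new_state


# A 5x5 room: border cells are walls, interior cells are free.
walls = {(r, c) for r in range(5) for c in range(5) if r in (0, 4) or c in (0, 4)}
start = {"red": (1, 1), "blue": (1, 3)}

after_red = slide(start, walls, "red", "right")   # red stops next to blue
after_blue = slide(start, walls, "blue", "down")  # blue stops at the bottom wall
```

Returning a fresh dict instead of mutating the input keeps every state independent of its parent, which matters as soon as states are stored and compared.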

Specifications of the problem

- A state cannot be predicted or generated at random. It must derive from (be a child of) a previous state.

- There is no effective way to measure how close we are to the goal state. We only know we reached the end when we find it.

- There can be too many possible states. On average, there are about 32^100 possible states per game level.

- Many states have derived states that are also their ancestors. For example, we can get stuck in a cycle that never ends.

- We cannot traverse the states from the goal to the start state — i.e. we can't walk back.

- Many of the states can lead to a dead end, where it isn't possible to reach the end state.

- The length of the path is limited, i.e. the number of object moves per game level is limited, so any solution found must be within this limit.
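For a sense of scale, the figures in the list above can be checked with a few lines of arithmetic. The first part only restates the 32^100 estimate. The second part is an assumption for illustration: a hypothetical helper, `sequences_within`, that counts move sequences if every move is "pick one of `balls` balls and one of 4 directions". It is an upper bound, since many moves leave the state unchanged or repeat an earlier state.

```python
import math

# Figure quoted in the list above: about 32^100 states per level.
state_count = 32 ** 100

# 32 is 2^5, so 32^100 is exactly 2^500.
assert state_count == 2 ** 500

digits = len(str(state_count))      # number of decimal digits
exponent = math.log10(state_count)  # base-10 order of magnitude

print(f"32^100 has {digits} decimal digits (about 10^{exponent:.1f})")


def sequences_within(move_limit: int, balls: int) -> int:
    """Upper bound on the number of move sequences of length 0..move_limit.

    Hypothetical model: each move picks one ball and one of 4 directions.
    """
    branching = 4 * balls
    return sum(branching ** k for k in range(move_limit + 1))


# Tiny case that is easy to verify by hand: 1 + 4 + 16 sequences.
small = sequences_within(move_limit=2, balls=1)
```

Either way of counting gives a number far beyond what can be enumerated, which is why the move limit alone does not make exhaustive search practical.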

What I have tried

- I tried a custom brute-force, tree-based algorithm, but there are too many possible states. It works well for the simpler game levels, and it can find the shortest path between the two states. However, for complex game levels (which are most of the levels), it doesn't work, because it's brute force.

- I tried genetic algorithms. Although there is no effective way of measuring how close we are to the goal state, there is some score that can be obtained when certain objects are moved in certain ways. A higher score means we are closer to the end state, but it can also lead us into a dead end, which is what happens most of the time, as game levels are designed that way on purpose. I used this score as a measure of fitness. The genetic algorithm works reasonably well for simple game levels, but it isn't reliable for most of the complex ones. Also, due to the nature of the problem, there is no way I can think of to implement crossover, and mutation is very hard to implement as well.

- I looked at (but have not tried) some path-finding algorithms, like A*, but they rely on a "how close are we to the goal?" property, which this problem doesn't really have.
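For reference, the brute-force attempt in the first bullet is roughly of the following kind. This is a sketch, not my actual algorithm: it is a plain breadth-first search with a visited set (to avoid cycles) and a move limit, over a simplified model with only walls and balls. The names `slide`, `solve`, `State` and `DIRECTIONS` are hypothetical, the goal is just a set of target positions, and slots, mines, magnets and other objects are ignored.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Cell = Tuple[int, int]
State = FrozenSet[Tuple[str, Cell]]  # hashable snapshot of ball positions
Move = Tuple[str, str]               # (ball name, direction name)

DIRECTIONS: Dict[str, Cell] = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def slide(state: State, walls: Set[Cell], ball: str, direction: str) -> State:
    """Slide one ball until it is blocked; assumes the level is enclosed by walls."""
    balls = dict(state)
    d_row, d_col = DIRECTIONS[direction]
    row, col = balls[ball]
    occupied = walls | {pos for name, pos in balls.items() if name != ball}
    while (row + d_row, col + d_col) not in occupied:
        row, col = row + d_row, col + d_col
    balls[ball] = (row, col)
    return frozenset(balls.items())


def solve(start: State, goal: State, walls: Set[Cell], max_moves: int) -> Optional[List[Move]]:
    """Breadth-first search: returns a shortest move list, or None within the limit."""
    visited = {start}                # states already seen, so cycles are never re-expanded
    queue = deque([(start, [])])     # (state, moves taken to reach it)
    while queue:
        state, path = queue.popleft()
        if state == goal:
            return path
        if len(path) == max_moves:   # move limit reached, do not expand further
            continue
        for ball, _ in sorted(state):
            for direction in DIRECTIONS:
                child = slide(state, walls, ball, direction)
                if child not in visited:
                    visited.add(child)
                    queue.append((child, path + [(ball, direction)]))
    return None


# A 5x5 room with one ball that must travel from one corner to the opposite one.
walls = {(r, c) for r in range(5) for c in range(5) if r in (0, 4) or c in (0, 4)}
start = frozenset({"red": (1, 1)}.items())
goal = frozenset({"red": (3, 3)}.items())

solution = solve(start, goal, walls, max_moves=10)
too_short = solve(start, goal, walls, max_moves=1)  # no solution within one move
```

The visited set is what removes the cycles, but it is also what runs out of memory on the complex levels, since every distinct state reached has to be stored.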

So, in the face of a problem like this, is there an algorithm that is a good candidate for finding solutions for these game levels? I guess Deep Learning approaches would work well for this, but that would be too much for this game, as hardware resources are somewhat limited.

EDIT 1 — An example 3rd party game

The game I'm making is, in concept, similar to this game. This game is based on a game called Q, which I only know from an old Sony Ericsson cell phone, one of the first cell phone models that had a color screen. The game I'm sharing as an example, which is in the Microsoft Store, is the only replica I could find; I couldn't find any online version, sorry about that. The game I'm working on has the same rules, but has additional objects that add different rules, to make the game more interesting (like objects that make balls stop, objects that make balls change direction, etc.).

If you play it, you may notice that a human solves it mostly by reasoning, because each level has a different wall geometry, some balls need to be put in their slots in a certain order for the level to be solvable, etc. With additional objects that add more complexity, solving the levels requires the human to understand some key ingredient specific to that level. For example, sometimes a ball is stuck in some place, and the key may be to use one of the other balls to get it out of there. There are some basic strategies that are helpful for most levels, but they alone cannot solve the levels. The simplest levels are pretty straightforward to solve, but there are ones (usually the most interesting) that require the human to be smart and use reason and some creativity to put all the balls in their respective slots.

Unfortunately, the game I'm working on is a work in progress, and is not yet functional on the visual part, so I can't yet use it as an example.

EDIT 2 — Some example game levels

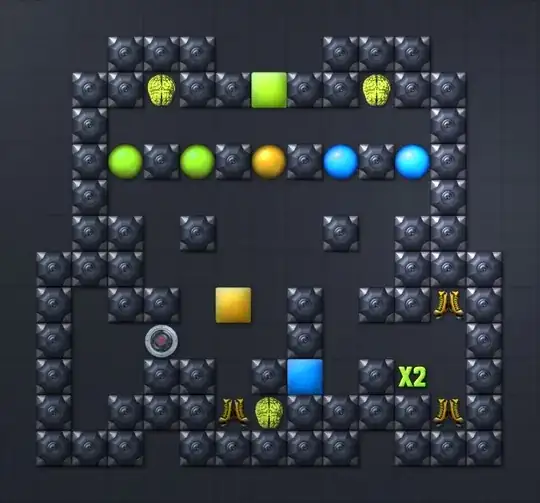

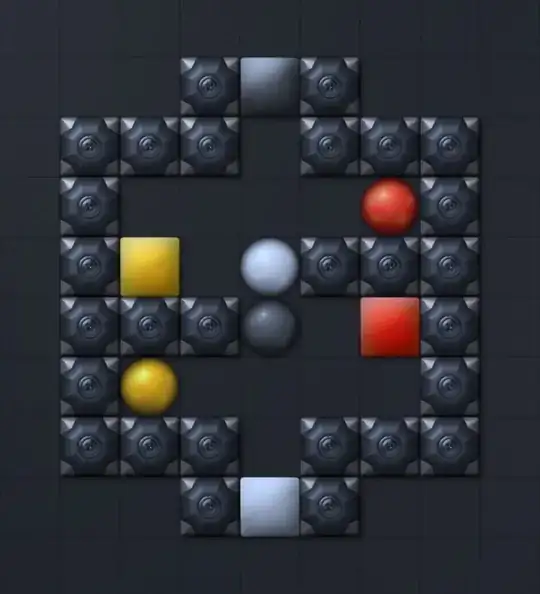

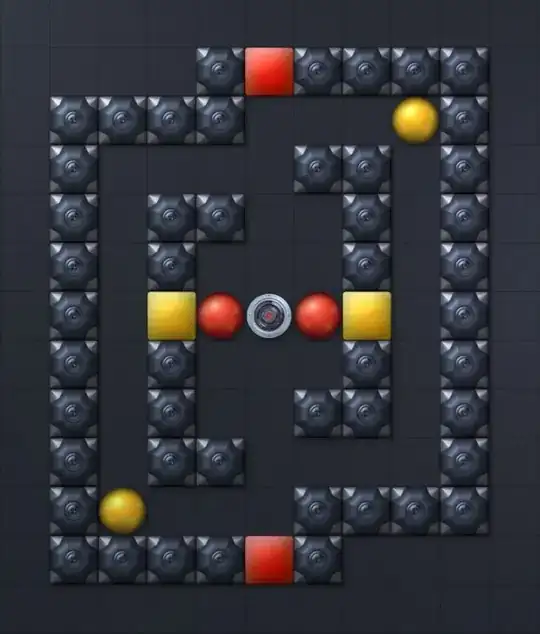

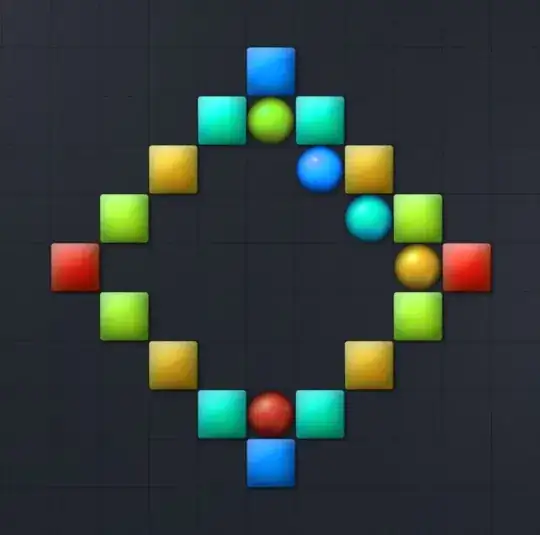

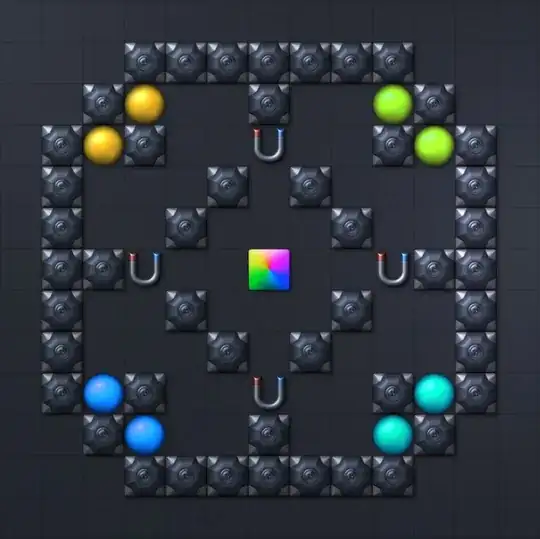

I managed to produce some pictures of some example levels for the game. They are the following, in order of hardness (well, more or less):

This level is fairly simple. Balls can be potted inside the squares with matching color, just like the 3rd party example game above.

This level is not very hard, but the mine in the center makes it tricky. Mines are objects you must not move over, or else you lose the game.

This level is tricky, because balls are stuck within the geometry of the level, and avoiding potting balls is key. To solve this level, a lot of collaboration between all balls is necessary.

This level is limited to a maximum of 60 moves. That is what makes it a bit harder. The magnets make balls stop when moved through, but they are consumed (i.e. one time use). The square in the center is an Iris slot — any ball can be potted inside it. The key for solving this level within the maximum number of moves is to choose ball routes wisely.

This is the hardest level in these examples. The boots (a pickable item), once consumed, add 10 extra moves to the move limit. The three boot items add 30 extra moves in total. To solve the level you need to consume them, or else you run out of moves. But going for the boots also costs some moves, so it requires a careful strategy. The little brains and the X2 are just optional pickable items that give some score.
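The boots trade-off comes down to simple bookkeeping, sketched below. Only the 10 moves per boot comes from the level description. The function names are hypothetical, and the base limit of 40 and the other numbers in the example are made up, since the real limit of this level is not stated here.

```python
BOOT_BONUS = 10  # extra moves granted by each boot, as described above


def moves_left(base_limit: int, moves_used: int, boots_collected: int) -> int:
    """Remaining move budget after collecting some boots."""
    return base_limit + BOOT_BONUS * boots_collected - moves_used


def boot_is_worth_it(detour_cost: int) -> bool:
    """A boot only helps if reaching it costs fewer moves than it gives back."""
    return detour_cost < BOOT_BONUS


# Hypothetical numbers: a base limit of 40 moves, 25 moves used, all three boots collected.
remaining = moves_left(base_limit=40, moves_used=25, boots_collected=3)
cheap_detour = boot_is_worth_it(detour_cost=4)    # gains 6 moves net
costly_detour = boot_is_worth_it(detour_cost=12)  # loses 2 moves net
```

For a solver, this means the move limit is not a constant: it is part of the state and changes along the path.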