I think the biggest thing you need to solve for is a deeper understanding of the individual nodes in your graph.

Load-balancers typically poll the nodes behind them at regular intervals to determine their load state.

Typically, a load-balancer is a configurable piece of software. The configuration you provide determines when it starts targeting other nodes in your configured resource stack, whether those are containers, virtual machines, physical machines, or some other esoteric variant.
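The poll-then-route behavior described above can be sketched roughly as follows. This is a minimal illustration, not any real product's API: `Node`, `LoadBalancer`, the `poll` callback, and the 0.8 `max_load` default are all hypothetical stand-ins for whatever your load-balancer's configuration actually exposes.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Node:
    name: str
    load: float = 0.0  # fraction of capacity in use, 0.0 to 1.0


class LoadBalancer:
    """Hypothetical load-balancer that polls nodes and routes by a configured threshold."""

    def __init__(self, nodes, poll: Callable[[Node], float], max_load: float = 0.8):
        self.nodes = list(nodes)
        self.poll = poll          # hypothetical load probe supplied by the caller
        self.max_load = max_load  # configured "this node is too busy" threshold

    def refresh(self) -> None:
        # The regular polling step: ask each node for its current load.
        for node in self.nodes:
            node.load = self.poll(node)

    def pick(self) -> Optional[Node]:
        # Prefer the least-loaded node that is still under the threshold.
        candidates = [n for n in self.nodes if n.load < self.max_load]
        if not candidates:
            return None  # every node is "too busy": nowhere to forward the request
        return min(candidates, key=lambda n: n.load)
```

For example, with `wss-a` polled at 0.9 and `wss-b` at 0.4, `pick()` returns `wss-b`; once both are above 0.8, it returns `None`, which is exactly the situation the scaling rules need to prevent.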

Those rules tie into horizontal scaling. The scaling rules of the system typically go hand-in-hand with the load-balancer's rules. After all, the two need to be aligned: otherwise, once the load-balancer decides 'this node is too busy', there is no other node to forward the request to. If that happens, the existing nodes keep taking on load until they flat-line.
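One way to express "the scaling rules must be similar to the load-balancer's rules" is as a simple consistency check. This is a sketch under assumed semantics: `ScalingPolicy`, both threshold names, and measuring demand in "node capacities" (1.0 means one fully loaded node) are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    lb_busy_threshold: float   # load at which the load-balancer stops targeting a node
    scale_up_threshold: float  # average load at which the scaler adds a node

    def is_aligned(self) -> bool:
        # The scaler must react no later than the load-balancer gives up on a node;
        # otherwise requests arrive while no node is eligible to receive them.
        return self.scale_up_threshold <= self.lb_busy_threshold

    def desired_nodes(self, total_load: float, minimum: int = 1) -> int:
        # total_load is expressed in node capacities (1.0 == one fully loaded node).
        # Provision enough nodes that the average load stays at or below the
        # scale-up threshold.
        return max(minimum, math.ceil(total_load / self.scale_up_threshold))
```

With a load-balancer threshold of 0.8 and a scale-up threshold of 0.75, the policy is aligned, and a total demand of 2.0 node capacities yields 3 nodes. Flip the scale-up threshold to 0.9 and the check fails: nodes would be marked busy before new capacity exists.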

From a logical standpoint, having every WebSocket server (WSS for short) subscribed to every possible topic is impossible, because it is constrained by the limits of the hardware.

The connection, or socket, received by a given WSS identifies the client from the original transaction. Traits of that client's identity indicate its interests, such as which topics to subscribe to.
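A per-WSS registry that ties each socket to a client identity, and that identity to its topics, might look like the sketch below. `ClientIdentity`, `ConnectionRegistry`, and the string socket IDs are hypothetical; a real server would key on its WebSocket library's connection objects.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ClientIdentity:
    client_id: str
    topics: Set[str] = field(default_factory=set)  # interests derived from the identity


class ConnectionRegistry:
    """Hypothetical per-WSS table of long-lived sockets and the clients behind them."""

    def __init__(self) -> None:
        self._by_socket: Dict[str, ClientIdentity] = {}

    def register(self, socket_id: str, identity: ClientIdentity) -> None:
        self._by_socket[socket_id] = identity

    def unregister(self, socket_id: str) -> None:
        self._by_socket.pop(socket_id, None)

    def interests(self) -> Dict[str, Set[str]]:
        # Invert the table: topic -> IDs of clients on this WSS interested in it.
        result: Dict[str, Set[str]] = {}
        for identity in self._by_socket.values():
            for topic in identity.topics:
                result.setdefault(topic, set()).add(identity.client_id)
        return result
```

The inverted `interests()` view is what a WSS would consult when deciding which topics it actually cares about, rather than subscribing to everything.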

Implementation Scenario 1

As a client makes a request, they are registered with a given WSS for that connection.

Most likely, what you'd have is some form of broad-based push notification from the PubSub to the WSS layer saying 'Something has occurred.' Only when that event is received from the PubSub would the WSS look deeper, crafting a targeted query to the PubSub from there. You'd use the long-lived connections to determine what kind of query to write: each long-lived connection represents a specific client, and that client is tied to the topics it is interested in.
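The WSS side of this "broad notification, then targeted query" flow can be sketched as below. The `query_pubsub` and `send` callables are hypothetical placeholders for your PubSub client and WebSocket send call, and the query shape (topic to interested client IDs, answered with topic to new events) is an assumption made for illustration.

```python
from typing import Callable, Dict, List, Set

# Hypothetical shapes: the PubSub answers a targeted query with topic -> new events.
QueryFn = Callable[[Dict[str, Set[str]]], Dict[str, List[str]]]
SendFn = Callable[[str, str], None]


class ScenarioOneWSS:
    def __init__(self, subscriptions: Dict[str, Set[str]],
                 query_pubsub: QueryFn, send: SendFn):
        self.subscriptions = subscriptions  # client_id -> topics, from long-lived connections
        self.query_pubsub = query_pubsub
        self.send = send

    def build_query(self) -> Dict[str, Set[str]]:
        # Topic -> interested client IDs held by this WSS.
        query: Dict[str, Set[str]] = {}
        for client_id, topics in self.subscriptions.items():
            for topic in topics:
                query.setdefault(topic, set()).add(client_id)
        return query

    def on_something_changed(self) -> int:
        """Handle the broad 'Something changed' push; returns messages delivered."""
        query = self.build_query()
        if not query:
            return 0  # no connected client cares about anything
        changes = self.query_pubsub(query)
        if not changes:
            return 0  # PubSub says nothing relevant changed: end the inquiry here
        delivered = 0
        for topic, events in changes.items():
            for client_id in sorted(query.get(topic, ())):
                for event in events:
                    self.send(client_id, event)
                    delivered += 1
        return delivered
```

The early `return 0` after an empty answer corresponds to the "if the PubSub says no, end the inquiry" step in the walkthrough.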

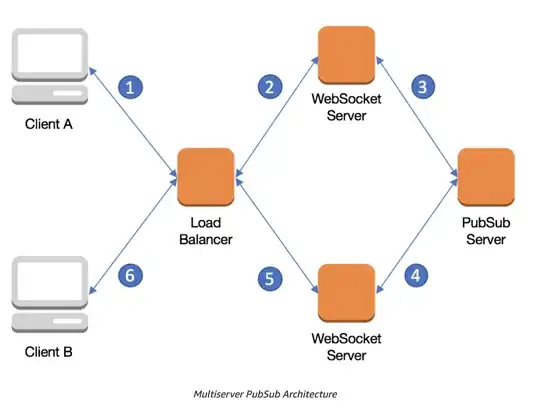

For instance, let's say Client A sent a message.

The WSS pointed to by 2-3 would push that to PubSub 3-4.

The WSS pointed to by 4-5 would receive a broad-based 'Changed' event.

WSS 4-5 would then ask the PubSub if anything changed for Client B's interests.

If PubSub 3-4 says no, you'd end the inquiry there immediately.

Now let's step back. WSS 2-3 and 4-5 each support 500 clients. Client 1 sends a message. WSS 2-3 and 4-5 both receive their 'Something changed' push notification, and each sends the PubSub a request containing the IDs of its relevant clients.

The PubSub would send a response targeted to the relevant clients. To reduce the chattiness of that response, it would most likely bundle multiple event notifications into one. Why? If, say, 50 of the clients are subscribed to the same topic, why repeat the same data 49 additional times?
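On the PubSub side, the bundling idea can be sketched by grouping recipients per topic so each payload appears once. `Bundle` and `build_response` are hypothetical names, and the request shape (client ID to topics, as sent by one WSS) is an assumption.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Bundle:
    topic: str
    events: List[str]      # payload included once per topic, not once per client
    recipients: List[str]  # every client on this WSS that should receive it


def build_response(interests: Dict[str, Set[str]],
                   pending: Dict[str, List[str]]) -> List[Bundle]:
    """interests: client_id -> topics sent by one WSS; pending: topic -> new events."""
    by_topic: Dict[str, Set[str]] = {}
    for client_id, topics in interests.items():
        for topic in topics:
            if pending.get(topic):  # only topics that actually have new events
                by_topic.setdefault(topic, set()).add(client_id)
    return [Bundle(topic, list(pending[topic]), sorted(clients))
            for topic, clients in sorted(by_topic.items())]
```

With 500 clients on a WSS, 50 of which follow a topic with one new event, the response contains a single bundle carrying the event once and listing 50 recipients; the WSS then fans it out locally.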

After this, the relevant WSS sends the notifications to the appropriate clients.

Implementation Scenario 2

As a client makes a request, they are registered with a given WSS for that connection.

The WSS for that client sends a registration event to PubSub 3-4. PubSub 3-4 needs to keep track of all of these separate WSS/client pairs, and for every event it receives, it must check those pairings and send notifications accordingly.
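The registration table PubSub 3-4 would need in this scenario might look roughly like this. `RegistrationTable` and its methods are hypothetical; the point is that the table holds an entry for every WSS/client/topic pairing, which is where the extra load on the PubSub comes from.

```python
from collections import defaultdict
from typing import Dict, Set


class RegistrationTable:
    """Hypothetical PubSub-side table of WSS/client pairs per topic (Scenario 2)."""

    def __init__(self) -> None:
        # topic -> wss_id -> client IDs
        self._pairs: Dict[str, Dict[str, Set[str]]] = defaultdict(lambda: defaultdict(set))

    def register(self, wss_id: str, client_id: str, topics: Set[str]) -> None:
        for topic in topics:
            self._pairs[topic][wss_id].add(client_id)

    def unregister(self, wss_id: str, client_id: str) -> None:
        for by_wss in self._pairs.values():
            clients = by_wss.get(wss_id)  # .get does not create missing entries
            if clients:
                clients.discard(client_id)

    def targets(self, topic: str) -> Dict[str, Set[str]]:
        # Check every pairing for this topic; only WSSs with live subscribers are returned.
        return {wss: set(c) for wss, c in self._pairs.get(topic, {}).items() if c}
```

Every connect, disconnect, and published event touches this table, so its size and update rate grow with the total number of connected clients across all WSSs.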

This puts a heavier load on PubSub 3-4, and may even require you to scale it vertically.

Client A sends a message. WSS 2-3 sends the event to PubSub 3-4. The PubSub interprets the message and also pulls in context about which clients are subscribed. It then works out the list of WSS targets that should receive the push notification and sends the notification to WSS 4-5.

WSS 4-5 receives a push notification for the common topic, and forwards that to Client B.

Taking a step back, WSS 2-3 and 4-5 each support 500 clients. Client 1 on WSS 2-3 sends a message, and 50 clients are subscribed to the same topic. PubSub 3-4 parses their interests and sends the relevant push notifications.
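The publish path in this scenario can be sketched as one push per target WSS, with each WSS fanning the push out to its own clients. `Push`, `publish`, and `forward` are hypothetical, and grouping recipients per WSS is an assumption about how "the list of WSS targets" would be used.

```python
from typing import Dict, List, NamedTuple, Set, Tuple


class Push(NamedTuple):
    wss_id: str
    recipients: List[str]
    payload: str


def publish(table: Dict[str, Dict[str, Set[str]]], topic: str, payload: str) -> List[Push]:
    """PubSub side: one push per WSS that has at least one subscriber to `topic`."""
    return [Push(wss_id, sorted(clients), payload)
            for wss_id, clients in sorted(table.get(topic, {}).items()) if clients]


def forward(push: Push) -> List[Tuple[str, str]]:
    """WSS side: fan the single push out to each of its subscribed clients."""
    return [(client_id, push.payload) for client_id in push.recipients]
```

If, hypothetically, the 50 subscribers were split 20 on WSS 2-3 and 30 on WSS 4-5, the PubSub would send 2 pushes, and the WSSs would together deliver 50 client messages.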

After this, the relevant WSS sends the notifications to the appropriate clients.

Comments and Follow-Up Questions

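Both implementation scenarios have their benefits and drawbacks. Scenario 1 keeps the PubSub simpler, since it does not need to track WSS/client pairings, but every change costs each WSS an extra round trip (the broad notification, then a targeted query). Scenario 2 removes that round trip, but concentrates the work of tracking and routing on the PubSub.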
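To put rough numbers on the trade-off, here is a back-of-the-envelope cost model for a single published event. It rests on simplifying assumptions: in Scenario 1 every WSS receives the broad push, sends one query, and gets one (possibly empty) reply; in Scenario 2 the PubSub tracks one pairing per connected client; the sender's initial message and client deliveries are identical in both and left out. `Cost` and both functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Cost:
    pubsub_to_wss_messages: int   # PubSub <-> WSS traffic for one event
    pairs_tracked_by_pubsub: int  # WSS/client pairings the PubSub keeps in memory


def scenario_one_cost(clients_per_wss: Dict[str, int]) -> Cost:
    # Broad push + targeted query + reply for every WSS; no pairings stored.
    return Cost(pubsub_to_wss_messages=3 * len(clients_per_wss), pairs_tracked_by_pubsub=0)


def scenario_two_cost(clients_per_wss: Dict[str, int],
                      subscribers_per_wss: Dict[str, int]) -> Cost:
    # One push per WSS that has subscribers, but every connected client is tracked.
    pushes = sum(1 for n in subscribers_per_wss.values() if n > 0)
    return Cost(pubsub_to_wss_messages=pushes,
                pairs_tracked_by_pubsub=sum(clients_per_wss.values()))
```

For two WSSs with 500 clients each, Scenario 1 costs 6 messages and no tracked pairings, while Scenario 2 (with subscribers on both WSSs) costs 2 messages but 1,000 tracked pairings.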

Now a question for you: when the load drops and a horizontal contraction occurs, how does the load-balancer reallocate the open sockets from the dropped WSS? And how is that change propagated down the line?