I've written an implementation of the UCT (UCB1 applied to trees) Monte Carlo Tree Search algorithm for selecting moves in a two-player game. In the future, I'd like to expand this implementation to use more advanced tree search techniques like RAVE so that it can search more efficiently. I'm trying to figure out how to write unit tests for this kind of highly complex "black box" code.

The basic idea of Monte Carlo tree search is to play thousands of random games and select promising search nodes based on previous game outcomes. The basic pseudocode for tree search (from "A Survey of Monte Carlo Tree Search Methods" by Browne et al.) would be:

    function UCTSEARCH(s₀)
        create root node v₀ with state s₀
        while within computational budget do
            v₁ ← TREEPOLICY(v₀)
            ∆ ← DEFAULTPOLICY(s(v₁))
            BACKUP(v₁, ∆)
        return (BESTCHILD(v₀, 0))

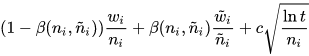
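For reference, the BESTCHILD step in the survey's UCT pseudocode selects the child with the highest UCB1-style score. Here $Q(v')$ is the total reward accumulated at child $v'$, $N(\cdot)$ is a visit count, and $c$ is the exploration constant:

$$
\mathrm{BESTCHILD}(v, c) = \operatorname*{arg\,max}_{v' \in \text{children of } v} \; \frac{Q(v')}{N(v')} + c \sqrt{\frac{2 \ln N(v)}{N(v')}}
$$

Calling it with $c = 0$, as in the final line of UCTSEARCH, drops the exploration term and simply returns the child with the best average reward.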

The search uses a Tree Policy to find a node in the tree which has not previously been explored, a Default Policy to score that node (e.g. by playing random games until termination), and a backpropagation step to apply reward values to parent nodes. Various algorithms exist to modify each of these steps; for example, RAVE (per Wikipedia) uses a modified selection formula.
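The three steps described above can be sketched in Python roughly as follows. This is only a minimal illustration under stated assumptions, not the implementation the question is about: the toy game (`NimState`, a single-pile Nim where the player who takes the last stone wins), the `Node` class, and all function names are hypothetical, and the random number generator is passed in explicitly as `rng`.

```python
import math
import random


class NimState:
    """Hypothetical toy game: take 1-3 stones; whoever takes the last stone wins."""

    def __init__(self, stones, player=1):
        self.stones = stones
        self.player = player  # the player about to move: 1 or 2

    def legal_moves(self):
        return [n for n in (1, 2, 3) if n <= self.stones]

    def play(self, move):
        return NimState(self.stones - move, 3 - self.player)

    def is_terminal(self):
        return self.stones == 0

    def winner(self):
        # Only meaningful at a terminal state: the player who just moved won.
        return 3 - self.player


class Node:
    def __init__(self, state, parent=None, move=None):
        self.state = state
        self.parent = parent
        self.move = move                    # move that led from parent to here
        self.children = []
        self.untried = state.legal_moves()  # moves not yet expanded
        self.visits = 0                     # N(v)
        self.reward = 0.0                   # Q(v): wins for the player who moved into v


def best_child(node, c):
    """Pick the child with the highest UCB1-style score; c=0 is pure exploitation."""
    def score(child):
        exploit = child.reward / child.visits
        explore = c * math.sqrt(2 * math.log(node.visits) / child.visits)
        return exploit + explore
    return max(node.children, key=score)


def tree_policy(node, rng, c):
    """Descend the tree; expand and return the first node with an untried move."""
    while not node.state.is_terminal():
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.state.play(move), parent=node, move=move)
            node.children.append(child)
            return child
        node = best_child(node, c)
    return node


def default_policy(state, rng):
    """Play uniformly random moves until the game ends; return the winner."""
    while not state.is_terminal():
        state = state.play(rng.choice(state.legal_moves()))
    return state.winner()


def backup(node, winner):
    """Propagate the playout result from the leaf up to the root."""
    while node is not None:
        node.visits += 1
        # Credit the node if the player who moved into it won the playout.
        if winner == 3 - node.state.player:
            node.reward += 1.0
        node = node.parent


def uct_search(root_state, iterations, rng, c=1 / math.sqrt(2)):
    """Run a fixed number of iterations and return the move of the best root child."""
    root = Node(root_state)
    for _ in range(iterations):
        leaf = tree_policy(root, rng, c)
        winner = default_policy(leaf.state, rng)
        backup(leaf, winner)
    return best_child(root, 0).move
```

A call such as `uct_search(NimState(5), 2000, random.Random(0))` returns one of the legal moves for the starting position. Taking `rng` as a parameter rather than using the module-level generator is a design choice made for this sketch; it keeps every source of randomness in one place.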

I'm finding it very difficult to write unit tests for code of this nature. From the perspective of a caller of this function, its behavior is opaque and non-deterministic, since it relies on random number generation. I also want to expand and modify the algorithm in the future, meaning all the implementation details are likely to change over time.
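To make the non-determinism concrete, here is a small sketch of a random playout estimate. It assumes a hypothetical single-pile Nim game (take 1 to 3 stones, last stone wins) and assumes that all randomness flows through a `random.Random` instance passed in by the caller; the function names are made up for illustration.

```python
import random


def random_playout(stones, rng):
    """Play the hypothetical Nim game with random moves; return the winner (1 or 2)."""
    player = 1
    while True:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return player  # this player took the last stone
        player = 3 - player


def win_rate(stones, playouts, rng):
    """Fraction of random playouts won by player 1."""
    wins = sum(random_playout(stones, rng) == 1 for _ in range(playouts))
    return wins / playouts


# Two generators built from the same seed produce identical estimates.
a = win_rate(10, 200, random.Random(42))
b = win_rate(10, 200, random.Random(42))

# An unseeded generator may give a different estimate on every run.
c = win_rate(10, 200, random.Random())
```

Under these assumptions `a` and `b` are always equal, while `c` can vary from run to run, which is the behavior a caller of the search function sees when the generator is hidden inside it.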

What strategy would you use to write unit tests for an algorithm like this?