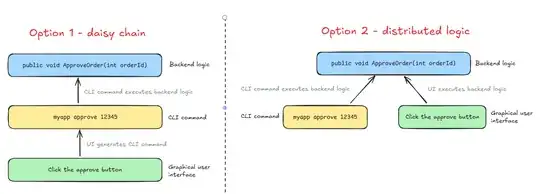

Sorry if the title sounds too broad; I'll try to clarify. I am trying to understand the structure of applications that have a GUI but in which any action can also be run from a CLI, either one integrated in the GUI, as in AutoCAD (reference picture), or by launching the entire application from the command line and circumventing the GUI altogether. Having worked with several such programs, I noticed that any manipulation of GUI elements automatically generates a command that shows up in the CLI. This implies that GUI inputs (for example, textboxes) are not bound to some underlying data, but instead write values through the arguments of CLI commands that modify that underlying data. I assume that reading data works the same way (through data retrieval commands).

This seems very different from the modern binding-based UI approach (as typically done with WPF) that is so popular nowadays. Making the GUI a runner for CLI commands seems like it would make the GUI harder to build, but an integrated CLI (as in the AutoCAD example) seems like a great convenience for the user. I could be wrong, though; maybe these two approaches are not at odds.
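To make my assumption concrete, here is a minimal Python sketch of the structure I am imagining. It is only my guess, not how AutoCAD or any real product is implemented, and every name in it (`Model`, `CommandProcessor`, `on_textbox_changed`, the `set`/`get` commands) is hypothetical. The GUI callback never touches the data directly; it builds a command string and hands it to the same processor that a typed command line would use.

```python
import shlex


class Model:
    """Underlying data; in this sketch only commands modify it."""

    def __init__(self):
        self.values = {}


class CommandProcessor:
    """Single entry point shared by the GUI and the command line (hypothetical)."""

    def __init__(self, model):
        self.model = model
        self.history = []  # what an integrated CLI pane could display
        self.handlers = {"set": self._set, "get": self._get}

    def execute(self, line):
        # Parse "name arg1 arg2 ..." with shell-like quoting rules.
        name, *args = shlex.split(line)
        if name not in self.handlers:
            raise ValueError(f"unknown command: {name}")
        self.history.append(line)
        return self.handlers[name](*args)

    def _set(self, key, value):
        self.model.values[key] = value
        return value

    def _get(self, key):
        return self.model.values.get(key)


def on_textbox_changed(processor, field, text):
    """Hypothetical GUI callback: emits a command instead of writing to the model."""
    return processor.execute(f"set {field} {shlex.quote(text)}")


if __name__ == "__main__":
    processor = CommandProcessor(Model())
    on_textbox_changed(processor, "radius", "2.5")  # GUI path
    processor.execute("set layer walls")            # typed CLI path
    print(processor.execute("get radius"))
    print(processor.history)
```

In this sketch both paths end up in `execute`, so the command history shows GUI actions and typed commands alike, which matches what I see in the programs I have used.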

My question is: can anyone explain how such applications are structured, and what the best practices for designing them are? Are my assumptions in the ballpark? I tried researching this, but I probably don't know what this kind of design is called, so I didn't find much. Any links, resources, or just a pointer to what I should search for would be greatly appreciated.